Perform the fastest-ever image segmentation in Android

Image segmentation is one of the most useful features that ML has provided us. Have you ever wondered how the ‘blur background’ feature works in Zoom, Google Meet or Microsoft Teams? How can a machine tell a person apart from the background without any depth perception?

That’s where image segmentation comes into the picture. This problem has been an active area of research, and as a result we have state-of-the-art ( SOTA ) deep learning ( DL ) methods that provide precise pixel-level segmentations. These models are huge, with many layers and millions of parameters. Running them on an edge device, which has limited battery life and computational resources, can be a challenge. MLKit, the latest ML package from Google, can save us from the tedious optimization needed to make these models lightweight and super-fast.

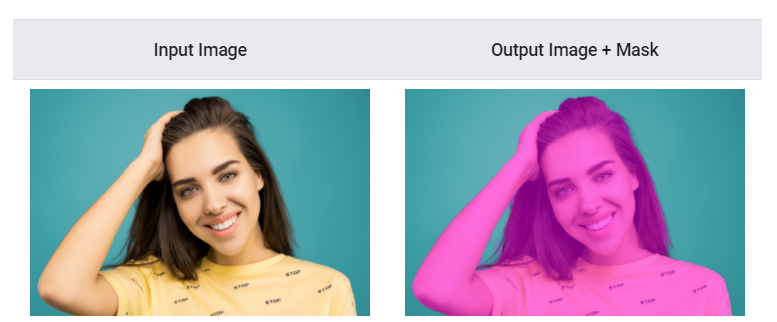

MLKit has a Selfie Segmentation feature, which is currently in beta ( as of August 2021 ), but we’ll use it on an Android device to perform image segmentation faster than ever. Here’s an example of how it looks while running on an Android device.

We’ll go through a step-by-step explanation of how to implement this app in Android. The source code can be found in this GitHub repo.


💡 Knowing the Basics

The story expects readers to have some understanding of image segmentation. If you’re new to the concept, you may refer to some of these resources:

📑 Contents

- Adding the dependencies ( CameraX & MLKit ) to build.gradle
- Adding the PreviewView and initializing the camera feed
- Creating an overlay to display the segmentation
- Getting live camera frames using ImageAnalysis.Analyzer
- Setting up MLKit’s Segmenter on the live camera feed
- Drawing the Segmentation Bitmap on the DrawingOverlay

1. 🔨 Adding the dependencies ( CameraX & MLKit ) to build.gradle

In order to add the MLKit Selfie Segmentation feature to our Android app, we need to add a dependency to our build.gradle ( app-level ) file.

```groovy
dependencies {
    implementation 'androidx.core:core-ktx:1.6.0'
    implementation 'androidx.appcompat:appcompat:1.3.1'
    implementation 'com.google.android.material:material:1.4.0'
    implementation 'androidx.constraintlayout:constraintlayout:2.1.0'
    // Other dependencies
    // Add the selfie segmentation dependency
    implementation 'com.google.mlkit:segmentation-selfie:16.0.0-beta2'
}
```

Note: make sure to use the latest release of the Selfie Segmentation package.

As we’ll perform image segmentation on the live camera feed, we’ll also require a camera library. So, in order to use CameraX, we add the following dependencies in the same file.

```groovy
dependencies {
    implementation 'androidx.core:core-ktx:1.6.0'
    implementation 'androidx.appcompat:appcompat:1.3.1'
    implementation 'com.google.android.material:material:1.4.0'
    implementation 'androidx.constraintlayout:constraintlayout:2.1.0'
    // Other dependencies
    // Add the selfie segmentation dependency
    implementation 'com.google.mlkit:segmentation-selfie:16.0.0-beta2'
    // CameraX dependencies
    implementation "androidx.camera:camera-camera2:1.0.0"
    implementation "androidx.camera:camera-lifecycle:1.0.0"
    implementation "androidx.camera:camera-view:1.0.0-alpha26"
    implementation "androidx.camera:camera-extensions:1.0.0-alpha26"
}
```

Note: make sure to use the latest release of the CameraX package.

Build and sync the project to make sure we’re good to go!

2. 🎥 Adding the PreviewView and initializing the camera feed

In order to display the live camera feed to the user, we’ll use PreviewView from the CameraX package. Thanks to PreviewView, only minimal setup is required to get the live camera feed running.

Now, head over to activity_main.xml and delete the default TextView ( the one showing ‘Hello World’ ). Next, add a PreviewView in activity_main.xml.

```xml
<?xml version="1.0" encoding="utf-8"?>
<androidx.constraintlayout.widget.ConstraintLayout xmlns:android="http://schemas.android.com/apk/res/android"
    xmlns:app="http://schemas.android.com/apk/res-auto"
    xmlns:tools="http://schemas.android.com/tools"
    android:layout_width="match_parent"
    android:layout_height="match_parent"
    tools:context=".MainActivity">

    <androidx.camera.view.PreviewView
        android:id="@+id/camera_preview_view"
        android:layout_width="0dp"
        android:layout_height="0dp"
        app:layout_constraintBottom_toBottomOf="parent"
        app:layout_constraintEnd_toEndOf="parent"
        app:layout_constraintStart_toStartOf="parent"
        app:layout_constraintTop_toTopOf="parent" />

</androidx.constraintlayout.widget.ConstraintLayout>
```

We need to initialize the PreviewView in MainActivity.kt, but first we need to add the CAMERA permission to AndroidManifest.xml, as shown below:

```xml
<?xml version="1.0" encoding="utf-8"?>
<manifest xmlns:android="http://schemas.android.com/apk/res/android"
    package="com.shubham0204.selfiesegmentation">

    <!-- Add the camera permission -->
    <uses-permission android:name="android.permission.CAMERA"/>

    <application
        android:allowBackup="true"
        android:icon="@mipmap/ic_launcher"
        android:label="@string/app_name"
        android:roundIcon="@mipmap/ic_launcher_round"
        android:supportsRtl="true"
        android:theme="@style/Theme.SelfieSegmentation">
        <activity
            android:name=".MainActivity"
            android:exported="true">
            <intent-filter>
                <action android:name="android.intent.action.MAIN" />
                <category android:name="android.intent.category.LAUNCHER" />
            </intent-filter>
        </activity>
    </application>

</manifest>
```

Now, open up MainActivity.kt and, in the onCreate method, check whether the camera permission is granted; if not, request it from the user. To provide a full-screen experience, remove the status bar as well. Also, initialize the PreviewView we created in activity_main.xml.

```kotlin
override fun onCreate(savedInstanceState: Bundle?) {
    super.onCreate(savedInstanceState)
    // Remove the status bar
    window.decorView.systemUiVisibility = View.SYSTEM_UI_FLAG_FULLSCREEN
    setContentView(R.layout.activity_main)
    previewView = findViewById( R.id.camera_preview_view )
    if (ActivityCompat.checkSelfPermission( this , Manifest.permission.CAMERA) != PackageManager.PERMISSION_GRANTED) {
        requestCameraPermission()
    }
    else {
        // Permission granted, start the camera live feed
        setupCameraProvider()
    }
}
```

To request the camera permission, we’ll use ActivityResultContracts.RequestPermission, so that we don’t have to manage a request code ourselves. If the permission is denied, we’ll display an AlertDialog to the user:

```kotlin
// <-------- MainActivity.kt ----------- >

private fun requestCameraPermission() {
    requestCameraPermissionLauncher.launch( Manifest.permission.CAMERA )
}

private val requestCameraPermissionLauncher = registerForActivityResult(
    ActivityResultContracts.RequestPermission() ) {
    isGranted : Boolean ->
    if ( isGranted ) {
        setupCameraProvider()
    }
    else {
        val alertDialog = AlertDialog.Builder( this ).apply {
            setTitle( "Permissions" )
            setMessage( "The app requires the camera permission to function." )
            setPositiveButton( "GRANT") { dialog, _ ->
                dialog.dismiss()
                requestCameraPermission()
            }
            setNegativeButton( "CLOSE" ) { dialog, _ ->
                dialog.dismiss()
                finish()
            }
            setCancelable( false )
            create()
        }
        alertDialog.show()
    }
}
```

Wondering what the setupCameraProvider method will do? It simply starts the live camera feed using the PreviewView, which we initialized earlier, and a CameraSelector object:

```kotlin
class MainActivity : AppCompatActivity() {

    ...
    private lateinit var previewView : PreviewView
    private lateinit var cameraProviderListenableFuture : ListenableFuture<ProcessCameraProvider>
    ...

    override fun onCreate(savedInstanceState: Bundle?) {
        super.onCreate(savedInstanceState)
        ...
        previewView = findViewById( R.id.camera_preview_view )
        ...
    }

    private fun setupCameraProvider() {
        cameraProviderListenableFuture = ProcessCameraProvider.getInstance( this )
        cameraProviderListenableFuture.addListener({
            try {
                val cameraProvider: ProcessCameraProvider = cameraProviderListenableFuture.get()
                bindPreview(cameraProvider)
            }
            catch (e: ExecutionException) {
                // e.message may be null, so avoid `!!` which would crash here
                Log.e("APP", e.message ?: "Failed to get the camera provider")
            }
            catch (e: InterruptedException) {
                Log.e("APP", e.message ?: "Interrupted while getting the camera provider")
            }
        }, ContextCompat.getMainExecutor( this ))
    }

    private fun bindPreview(cameraProvider: ProcessCameraProvider) {
        val preview = Preview.Builder().build()
        // Use the front ( selfie ) camera
        val cameraSelector = CameraSelector.Builder()
            .requireLensFacing(CameraSelector.LENS_FACING_FRONT)
            .build()
        preview.setSurfaceProvider(previewView.surfaceProvider)
        val displayMetrics = resources.displayMetrics
        val screenSize = Size( displayMetrics.widthPixels, displayMetrics.heightPixels)
        // Built here; it is attached to the camera in a later section
        val imageAnalysis = ImageAnalysis.Builder()
            .setTargetResolution( screenSize )
            .setBackpressureStrategy(ImageAnalysis.STRATEGY_KEEP_ONLY_LATEST)
            .build()
        cameraProvider.bindToLifecycle(
            (this as LifecycleOwner),
            cameraSelector,
            preview
        )
    }

}
```

Now, run the app on a device/emulator and grant the camera permission to the app. The camera feed should run as expected. This completes half of our journey, as we still have to display a segmentation map to the user.

3. 📱 Creating an overlay to display the segmentation

In order to display the segmentation over the live camera feed, we’ll need a SurfaceView placed over the PreviewView in activity_main.xml. The segmentation masks ( as Bitmaps ) will be supplied to the overlay so that they can be drawn over the live camera feed. To start, we create a custom View called DrawingOverlay, which extends SurfaceView.

```kotlin
import android.content.Context
import android.graphics.Bitmap
import android.graphics.Canvas
import android.util.AttributeSet
import android.view.SurfaceHolder
import android.view.SurfaceView

class DrawingOverlay(context : Context, attributeSet : AttributeSet) : SurfaceView( context , attributeSet ) , SurfaceHolder.Callback {

    // Segmentation mask for the current frame, set by FrameAnalyser
    var maskBitmap : Bitmap? = null

    // Intentionally empty: TODO() would throw NotImplementedError if these were ever called
    override fun surfaceCreated(holder: SurfaceHolder) { }

    override fun surfaceChanged(holder: SurfaceHolder, format: Int, width: Int, height: Int) { }

    override fun surfaceDestroyed(holder: SurfaceHolder) { }

    override fun onDraw(canvas: Canvas?) {
        // Draw the segmentation here
    }

}
```

We’ll add the above View to activity_main.xml.

```xml
<?xml version="1.0" encoding="utf-8"?>
<androidx.constraintlayout.widget.ConstraintLayout xmlns:android="http://schemas.android.com/apk/res/android"
    xmlns:app="http://schemas.android.com/apk/res-auto"
    xmlns:tools="http://schemas.android.com/tools"
    android:layout_width="match_parent"
    android:layout_height="match_parent"
    tools:context=".MainActivity">

    <androidx.camera.view.PreviewView
        ...
        />

    <com.shubham0204.selfiesegmentation.DrawingOverlay
        android:id="@+id/camera_drawing_overlay"
        android:layout_width="0dp"
        android:layout_height="0dp"
        app:layout_constraintBottom_toBottomOf="parent"
        app:layout_constraintEnd_toEndOf="@+id/camera_preview_view"
        app:layout_constraintStart_toStartOf="parent"
        app:layout_constraintTop_toTopOf="parent" />

</androidx.constraintlayout.widget.ConstraintLayout>
```

Code Snippet 9: Adding DrawingOverlay to activity_main.xml


Also, we need to initialize the DrawingOverlay in MainActivity.kt, which will help us connect it with the live camera feed:

```kotlin
override fun onCreate(savedInstanceState: Bundle?) {
    super.onCreate(savedInstanceState)
    ...
    drawingOverlay = findViewById( R.id.camera_drawing_overlay )
    drawingOverlay.setWillNotDraw(false)
    drawingOverlay.setZOrderOnTop(true)
    ...
}
```

4. 🎦 Getting live camera frames using ImageAnalysis.Analyzer

In order to perform segmentation and display the output to the user, we first need a way to get the camera frames from the live feed. Going through the CameraX documentation, you’ll notice that we have to use ImageAnalysis.Analyzer to get the camera frames as android.media.Image, which can be converted to our favorite Bitmaps.
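For the curious, here is roughly what converting a frame to a Bitmap involves. CameraX’s ImageAnalysis delivers frames in the YUV_420_888 format by default: a full-resolution luma ( Y ) plane plus two chroma ( U, V ) planes subsampled by 2 in each direction, each exposed through Image.getPlanes() with its own row stride and pixel stride. The sketch below is a plain-Kotlin illustration, not part of the app; it assumes full-range BT.601 coefficients and takes the plane bytes and strides as ordinary arguments ( the helper names yuvToArgb and yuv420ToArgb are hypothetical ). In this story we never actually need it, since MLKit’s InputImage.fromMediaImage accepts the Image directly.

```kotlin
// Hypothetical helper: converts one YUV pixel into a packed ARGB_8888 Int.
// Assumes full-range BT.601 coefficients.
fun yuvToArgb(y: Int, u: Int, v: Int): Int {
    // Chroma samples are stored with an offset of 128
    val cb = u - 128
    val cr = v - 128
    val r = (y + 1.402 * cr).toInt().coerceIn(0, 255)
    val g = (y - 0.344136 * cb - 0.714136 * cr).toInt().coerceIn(0, 255)
    val b = (y + 1.772 * cb).toInt().coerceIn(0, 255)
    // Opaque alpha in the top byte, followed by R, G and B
    return (0xFF shl 24) or (r shl 16) or (g shl 8) or b
}

// Hypothetical helper: converts a whole YUV_420_888 frame into ARGB pixels (row-major).
// The Y plane's pixel stride is 1 for this format; U and V share the given strides.
fun yuv420ToArgb(
    yPlane: ByteArray, uPlane: ByteArray, vPlane: ByteArray,
    width: Int, height: Int,
    yRowStride: Int, uvRowStride: Int, uvPixelStride: Int
): IntArray {
    val out = IntArray(width * height)
    for (row in 0 until height) {
        for (col in 0 until width) {
            val y = yPlane[row * yRowStride + col].toInt() and 0xFF
            // Each chroma sample covers a 2x2 block of luma samples
            val uvIndex = (row / 2) * uvRowStride + (col / 2) * uvPixelStride
            val u = uPlane[uvIndex].toInt() and 0xFF
            val v = vPlane[uvIndex].toInt() and 0xFF
            out[row * width + col] = yuvToArgb(y, u, v)
        }
    }
    return out
}
```

On a device, the three byte arrays would be copied out of planes[0], planes[1] and planes[2], and the resulting IntArray could be passed to Bitmap.createBitmap( colors, width, height, Bitmap.Config.ARGB_8888 ).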

We then create a new class, FrameAnalyser ( in FrameAnalyser.kt ), which implements ImageAnalysis.Analyzer and takes the DrawingOverlay as an argument in its constructor. We’ll discuss this in the next section, as this will help us connect the DrawingOverlay with the live camera feed.

```kotlin
class FrameAnalyser( private var drawingOverlay: DrawingOverlay) : ImageAnalysis.Analyzer {

    override fun analyze(image: ImageProxy) {
        // A local val lets Kotlin smart-cast the nullable android.media.Image
        val frameMediaImage = image.image
        if ( frameMediaImage != null ) {
            // Process the image here ...
        }
        // Release the frame once processing is done; with STRATEGY_KEEP_ONLY_LATEST,
        // CameraX delivers no new frames until the current ImageProxy is closed
        image.close()
    }

}
```

5. 💻 Setting up MLKit’s Segmenter on the live camera feed

Finally, we’ll initialize the Segmenter, which will segment the images for us. For every image-based service in MLKit, you need to convert the input image ( which can be a Bitmap, ByteBuffer or Image ) to an InputImage, which comes from the MLKit package. All the above-mentioned logic will be executed in FrameAnalyser’s analyze() method. We’ll use the InputImage.fromMediaImage method to directly use the Image object provided by the analyze method.
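The second argument of InputImage.fromMediaImage, image.imageInfo.rotationDegrees, tells MLKit how far the frame must be rotated to appear upright. Our understanding is that the returned mask follows that upright orientation, so its width and height are swapped relative to the raw camera frame whenever the rotation is 90 or 270 degrees ( typical for a phone held in portrait ). The hypothetical helper below captures that rule in plain Kotlin; it can be handy when sizing the overlay or sanity-checking segmentationMask.width and segmentationMask.height.

```kotlin
// Hypothetical types/helpers for illustration only.
data class FrameSize(val width: Int, val height: Int)

// Returns the size of a frame after rotating it by rotationDegrees to make it upright.
fun uprightSize(width: Int, height: Int, rotationDegrees: Int): FrameSize {
    // CameraX reports rotation as 0, 90, 180 or 270
    require(rotationDegrees % 90 == 0) { "rotationDegrees must be a multiple of 90" }
    // A quarter turn (90 or 270 degrees) swaps the two axes; 0 and 180 keep them
    return if ((rotationDegrees / 90) % 2 != 0) FrameSize(height, width)
    else FrameSize(width, height)
}
```

For example, a 640 x 480 sensor frame with a rotation of 90 degrees would be expected to yield a 480 x 640 mask.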

```kotlin
import android.graphics.Bitmap
import android.util.Log
import androidx.camera.core.ImageAnalysis
import androidx.camera.core.ImageProxy
import com.google.mlkit.vision.common.InputImage
import com.google.mlkit.vision.segmentation.Segmentation
import com.google.mlkit.vision.segmentation.selfie.SelfieSegmenterOptions

class FrameAnalyser( private var drawingOverlay: DrawingOverlay) : ImageAnalysis.Analyzer {

    // Initialize the Segmenter; STREAM_MODE is intended for live camera frames
    private val options =
        SelfieSegmenterOptions.Builder()
            .setDetectorMode( SelfieSegmenterOptions.STREAM_MODE )
            .build()
    val segmenter = Segmentation.getClient(options)

    override fun analyze(image: ImageProxy) {
        // A local val lets Kotlin smart-cast the nullable Image
        val frameMediaImage = image.image
        if ( frameMediaImage == null ) {
            // Nothing to process; release the frame so CameraX can deliver the next one
            image.close()
            return
        }
        val inputImage = InputImage.fromMediaImage( frameMediaImage , image.imageInfo.rotationDegrees )
        segmenter.process( inputImage )
            .addOnSuccessListener { segmentationMask ->
                val mask = segmentationMask.buffer
                val maskWidth = segmentationMask.width
                val maskHeight = segmentationMask.height
                mask.rewind()
                val bitmap = Bitmap.createBitmap( maskWidth , maskHeight , Bitmap.Config.ARGB_8888 )
                bitmap.copyPixelsFromBuffer( mask )
                // Pass the segmentation bitmap to drawingOverlay
                drawingOverlay.maskBitmap = bitmap
                drawingOverlay.invalidate()
            }
            .addOnFailureListener { exception ->
                Log.e( "App" , exception.message ?: "Segmentation failed" )
            }
            .addOnCompleteListener {
                // Close the ImageProxy only after MLKit has finished with the frame
                image.close()
            }
    }

}
```

In the above code snippet, we convert segmentationMask.buffer, which is a ByteBuffer, to a Bitmap. Then we assign this bitmap to the maskBitmap variable in DrawingOverlay and call drawingOverlay.invalidate() to refresh the overlay. This triggers onDraw in the DrawingOverlay class, where we will display the segmentation Bitmap to the user in a later section.
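One caveat on that conversion: according to the MLKit documentation, the mask buffer holds one 32-bit float per pixel, the confidence ( between 0.0 and 1.0 ) that the pixel belongs to the person. copyPixelsFromBuffer does not turn those floats into colours; it reinterprets their raw bytes as ARGB_8888 pixels, so the colours you see are a by-product of the float encoding rather than a deliberate choice. A more explicit approach is to read the confidences with getFloat(), mirroring the pattern in the MLKit documentation, and map them to colours yourself. The plain-Kotlin sketch below does this; the helper name maskToColors, the 0.5 threshold and the semi-transparent blue colour are all assumptions chosen for illustration.

```kotlin
import java.nio.ByteBuffer

// Hypothetical helper: maps per-pixel foreground confidences (one float per pixel,
// row-major) to ARGB_8888 colours.
fun maskToColors(
    mask: ByteBuffer,
    width: Int,
    height: Int,
    foregroundColor: Int = 0x7F0000FF, // assumed: semi-transparent blue (alpha 0x7F)
    threshold: Float = 0.5f            // assumed cut-off for "this pixel is the person"
): IntArray {
    // Start reading from the first pixel
    mask.rewind()
    val colors = IntArray(width * height)
    for (i in 0 until width * height) {
        val confidence = mask.getFloat()
        // Pixels below the threshold stay fully transparent (0x00000000)
        colors[i] = if (confidence >= threshold) foregroundColor else 0
    }
    return colors
}
```

Inside addOnSuccessListener, the returned array could replace the copyPixelsFromBuffer call, for example via Bitmap.createBitmap( colors, maskWidth, maskHeight, Bitmap.Config.ARGB_8888 ).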

This connects the live camera feed to the DrawingOverlay with the help of FrameAnalyser. One last thing: we need to attach FrameAnalyser to the camera in MainActivity.kt:

```kotlin
class MainActivity : AppCompatActivity() {

    ...
    private lateinit var frameAnalyser : FrameAnalyser

    override fun onCreate(savedInstanceState: Bundle?) {
        super.onCreate(savedInstanceState)
        ...
        drawingOverlay = findViewById( R.id.camera_drawing_overlay )
        ...
        frameAnalyser = FrameAnalyser( drawingOverlay )
        ...
    }

    private fun setupCameraProvider() {
        ...
    }

    private fun bindPreview(cameraProvider: ProcessCameraProvider) {
        ...
        // Attach the frameAnalyser here ...
        imageAnalysis.setAnalyzer( Executors.newSingleThreadExecutor() , frameAnalyser )
        cameraProvider.bindToLifecycle(
            (this as LifecycleOwner),
            cameraSelector,
            imageAnalysis,
            preview
        )
    }

}
```

6. 📝 Drawing the Segmentation Bitmap on the DrawingOverlay

As we saw in the implementation of the DrawingOverlay class, there’s a variable maskBitmap which holds the segmentation bitmap for the current frame. Our goal is to draw this Bitmap onto the screen, so we call canvas.drawBitmap in the onDraw method of our DrawingOverlay.
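Where exactly should the mask be drawn? By default, PreviewView scales the camera stream with its FILL_CENTER scale type: the stream is scaled until it covers the whole view, and the overflow is cropped equally on both sides. If the mask is simply stretched to the overlay’s bounds, it can drift away from the person in the preview. The hypothetical fillCenterRect helper below computes a destination rectangle that applies the same fill-and-center rule to the mask, assuming the analysis frame and the preview share the same aspect ratio.

```kotlin
// Hypothetical type/helper for illustration only.
data class DestRect(val left: Float, val top: Float, val right: Float, val bottom: Float)

// Computes where a source image (srcW x srcH) lands inside a view (dstW x dstH)
// when it is scaled to cover the view and centered, cropping any overflow.
fun fillCenterRect(srcW: Int, srcH: Int, dstW: Int, dstH: Int): DestRect {
    // Pick the larger scale so that both dimensions cover the view
    val scale = maxOf(dstW.toFloat() / srcW, dstH.toFloat() / srcH)
    val scaledW = srcW * scale
    val scaledH = srcH * scale
    // Center the scaled image; negative offsets mean that side is cropped
    val left = (dstW - scaledW) / 2f
    val top = (dstH - scaledH) / 2f
    return DestRect(left, top, left + scaledW, top + scaledH)
}
```

In onDraw, the four values could be wrapped in a RectF and passed to canvas.drawBitmap( bitmap, null, destRect, null ), which scales the whole bitmap into that rectangle.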

Also, note that we need to use the flipBitmap method, as the segmentation we obtain is mirrored.
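The story does not show flipBitmap itself. The mirroring is needed because we use the front camera: by default, PreviewView displays the front-camera preview mirrored, while the frames handed to ImageAnalysis, and hence the mask, are not. As a hypothetical stand-in, the plain-Kotlin function below mirrors a row-major pixel array around its vertical axis, which is the operation flipBitmap has to perform.

```kotlin
// Hypothetical stand-in for flipBitmap: mirrors a row-major ARGB pixel array
// horizontally (left <-> right), leaving the rows in place.
fun flipHorizontally(pixels: IntArray, width: Int, height: Int): IntArray {
    require(pixels.size == width * height) { "pixel count must equal width * height" }
    val flipped = IntArray(pixels.size)
    for (row in 0 until height) {
        val rowStart = row * width
        for (col in 0 until width) {
            // The pixel at column col moves to column (width - 1 - col)
            flipped[rowStart + (width - 1 - col)] = pixels[rowStart + col]
        }
    }
    return flipped
}
```

On Android, the same effect can be obtained without a manual loop by building a Matrix with preScale( -1f, 1f ) and calling Bitmap.createBitmap( source, 0, 0, source.width, source.height, matrix, false ).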

That’s all, we’re done! Run the app on a physical device and see the magic happen right in front of your eyes!

We’re done

Hope you loved MLKit’s Segmentation API. For any suggestions & queries, feel free to send a message to equipintelligence@gmail.com ( including the story’s link/title ). Keep reading, keep learning and have a nice day ahead!