Hey! It’s been a while since my last post, but the time has come to share another story about the architectural components we built with the Android team at Sync. This one is about caching.

Brief context overview

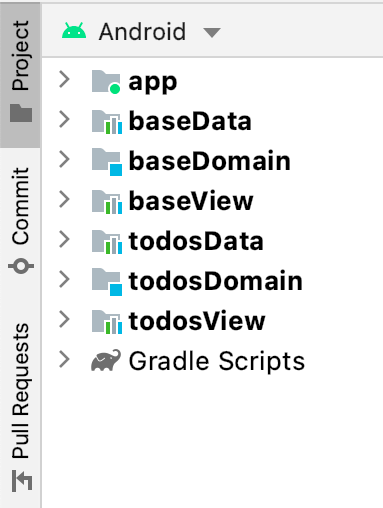

If you read my previous post about Jetpack Navigation you may remember the Android team guidelines we aim to follow whenever possible:

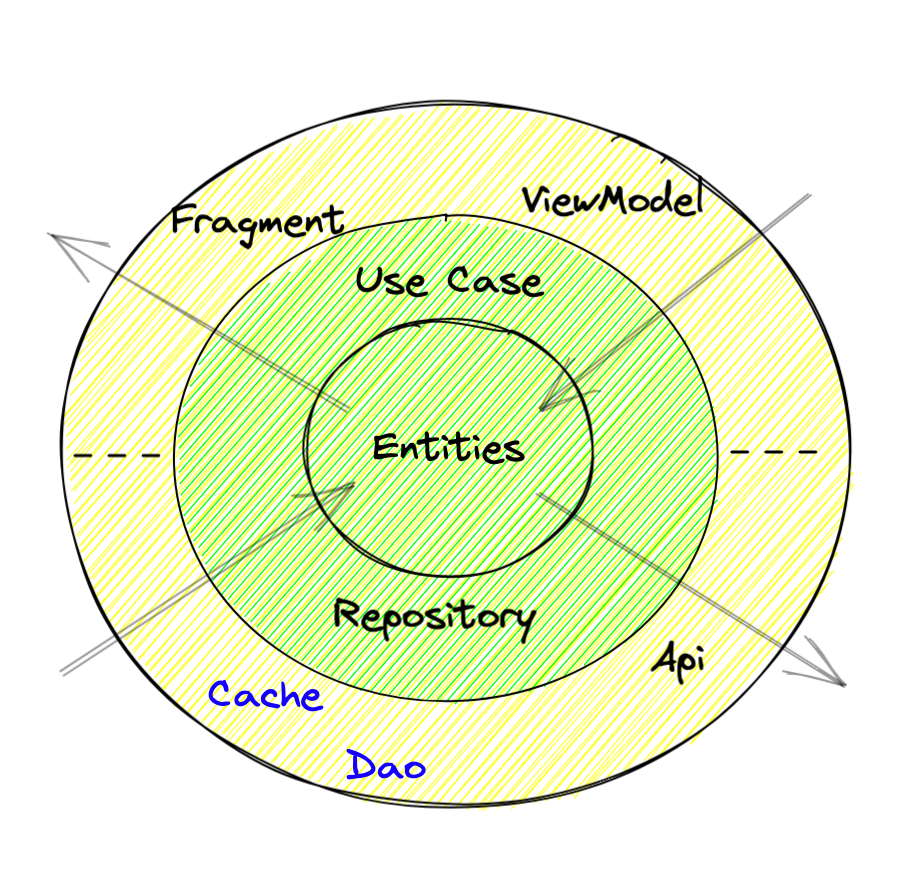

- We must follow Clean Architecture Design

- We agreed to go with a multi-modular project

- Try to follow Android suggested best practices

Let me also remind you of the structure we have at Sync. for the Android project:

- Kotlin Base domain module to place domain abstractions.

- Feature domain Kotlin modules.

- Base view module Android library to place UI-related architectural abstractions.

- Feature view Android modules.

- App module, where we join all together and build the final app.

- A Single API module, containing all related code for our API. We are planning to split this by feature as well.

So when we started analysing the new requirement about “enabling offline availability all over the app”, we quickly agreed to go with the Jetpack Room library, as it seemed to meet all of the team guidelines. That was an easy choice.

The real analysis and architectural discussion started when we asked ourselves: how are we going to enable such support in our existing app?

Building up the strategy

We spent some days doing the proper analysis of our current status to come up with an implementation strategy. Initial requirements were:

- Minimise the refactoring needed (if possible).

- Stepped migration (enable caches one by one).

- Have a new data source per repository to manage the cache. This applies only to those repositories that require it.

- Keep responsibilities where they belong, as a database concept is now added and we don’t want to merge that with the domain layer.

- Manage the “single source of truth” concept. This means the repository now becomes the unique source of truth, whether it has one or more data sources.

- Follow the recommended way of cache maintenance.

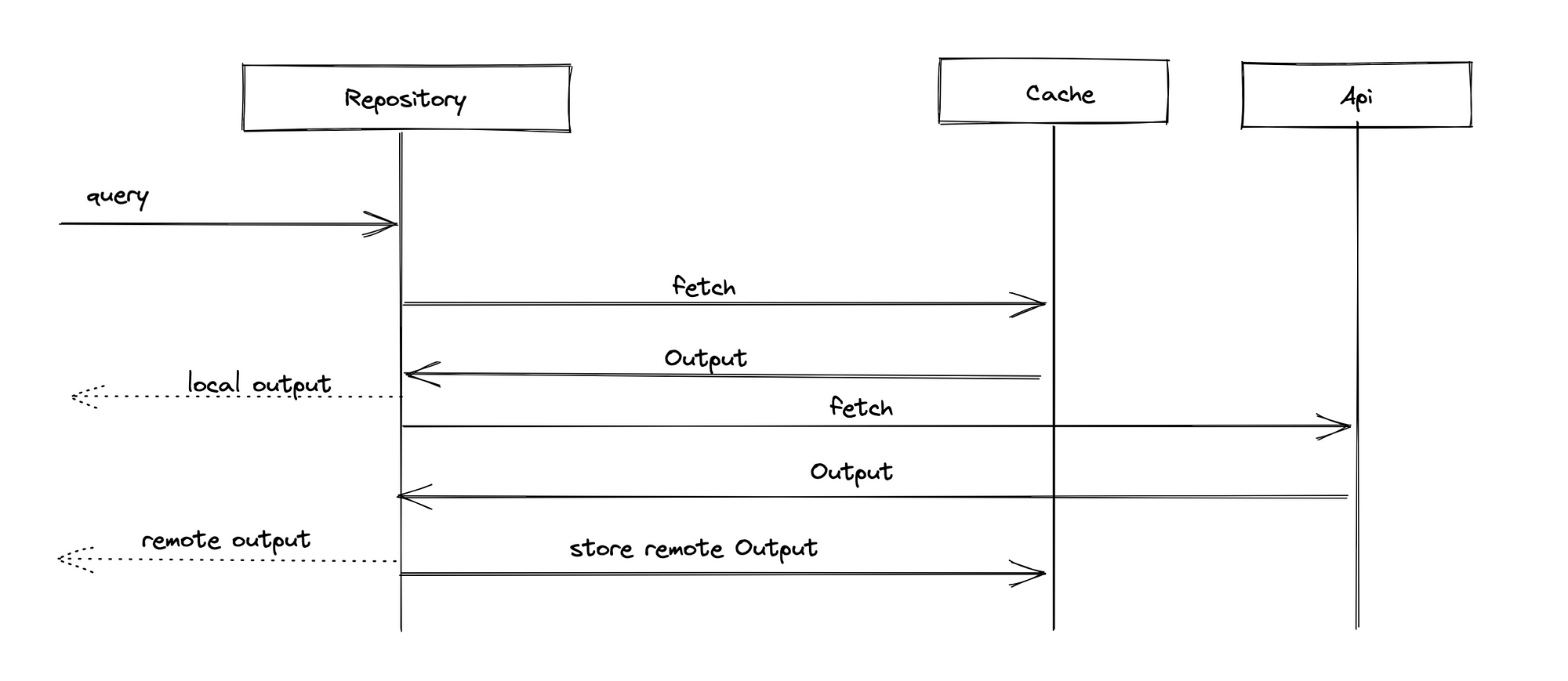

When we speak about cache maintenance there are several ways to build and keep track of a cache. For this proposal we aimed to go with the simplest flow we knew for doing so.

Steps to follow, when a repository runs a query, are:

- Fetch and return local data if any

- Fetch and return remote data if any

- Update local data with remote results if any
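The three steps above can be sketched without any framework. This is only an illustration: InMemoryCache and queryOnce are hypothetical names that are not part of our code base, and the returned list stands in for the values a caller would receive, in order.

```kotlin
// Hypothetical stand-in for a local data source; not part of the original project.
class InMemoryCache<T : Any> {
    private var value: T? = null
    fun read(): T? = value
    fun write(newValue: T) { value = newValue }
}

// Returns the values a caller would see, in delivery order.
fun <T : Any> queryOnce(cache: InMemoryCache<T>, fetchRemote: () -> T?): List<T> {
    val delivered = mutableListOf<T>()
    // 1. Deliver local data, if any.
    cache.read()?.let { delivered += it }
    // 2. Deliver remote data, if any.
    val remote = fetchRemote()
    if (remote != null) {
        delivered += remote
        // 3. Update local data with the remote result.
        cache.write(remote)
    }
    return delivered
}

fun main() {
    val cache = InMemoryCache<List<String>>()
    // First query: the cache is empty, so only the remote value is delivered.
    println(queryOnce(cache) { listOf("buy milk") })
    // Second query: the cached value is delivered first, then the fresh remote one.
    println(queryOnce(cache) { listOf("buy milk", "call mom") })
}
```

Note that when the remote source returns nothing, the cache is left untouched and the caller still gets the last stored value.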

During the initial session, we came up with a few topics to take into account for the final solution design. I’m not going to go deep into all of them today, but for the record, those were:

- Database encryption.

- Database migrations.

- Database clean up (when to wipe out data, and how).

- Cache replace strategy (what to do when new content arrives for existing entities).

- Repository fetch strategy (when to pull from cache and remote).

- Flow creation and lifetime. (Who owns the flow and when do we close it).

- Use Case with Flow. (As at that time, Use cases didn’t have to deal with Flows at all).

- Flow handling on ViewModel.

Of course, I could deep dive into each of those topics and write about them… but why not just present the solution we came up with?

If you can’t wait for the full explanation, I updated the ToDo demo application from my previous article with all these implementation steps.

Working with Cached Resources

The hardest part for us was finding an abstraction good enough to implement the cache maintenance steps in a generic way, while being flexible enough to work with most of our cache candidate domain models.

Inspired by the Dropbox Store library (which I highly recommend if it fits your needs), we aimed to build something similar to their Stores, but with a much smaller scope, as we have some predefined rules to build our cache. So let me introduce you to our Resource.

```kotlin
import kotlinx.coroutines.flow.Flow
import kotlinx.coroutines.flow.flow

class Resource<in Input, out Output>(
    private val remoteFetch: suspend (Input) -> Output?,
    private val localFetch: suspend (Input) -> Output?,
    private val localStore: suspend (Output) -> Unit,
    private val localDelete: suspend () -> Unit,
    private val refreshControl: RefreshControl = RefreshControl()
) : RefreshControl.Listener, ITimeLimitedResource by refreshControl {

    init { refreshControl.addListener(this) }

    // Public API
    suspend fun query(args: Input, force: Boolean = false): Flow<Output?> = flow {
        // Deliver cached data first, unless a forced refresh was requested
        if (!force) {
            fetchFromLocal(args)?.run { emit(this) }
        }
        // Hit the remote source only when the cache expired or on forced refresh
        if (refreshControl.isExpired() || force) {
            fetchFromRemote(args).run { emit(this) }
        }
    }

    override suspend fun cleanup() {
        deleteLocal()
    }

    // Private API
    private suspend fun deleteLocal() = kotlin.runCatching {
        localDelete()
    }.getOrNull()

    private suspend fun fetchFromLocal(args: Input) = kotlin.runCatching {
        localFetch(args)
    }.getOrNull()

    // Remote errors are rethrown; storage errors are swallowed
    private suspend fun fetchFromRemote(args: Input) = kotlin.runCatching {
        remoteFetch(args)
    }.getOrThrow()?.also {
        kotlin.runCatching {
            localStore(it)
            refreshControl.refresh()
        }
    }
}
```

Following Dropbox’s pattern, we opted for lambda functions to decouple data sources from the resource itself. To comply with the maintenance rules, when building a Resource<Input, Output> we require:

- localFetch(i: Input): Output? to query your local data source

- remoteFetch(i: Input): Output? to query your remote data source

- localStore(o: Output) to store remote results

In addition, we also require a localDelete() to wipe out the local store if required.

The above graph aims to represent the default flow that our Resource follows to deliver a Flow<Output> result. The initial premise was to fetch from cache, fetch from remote, and update the cache after delivering results on every query to a Resource.

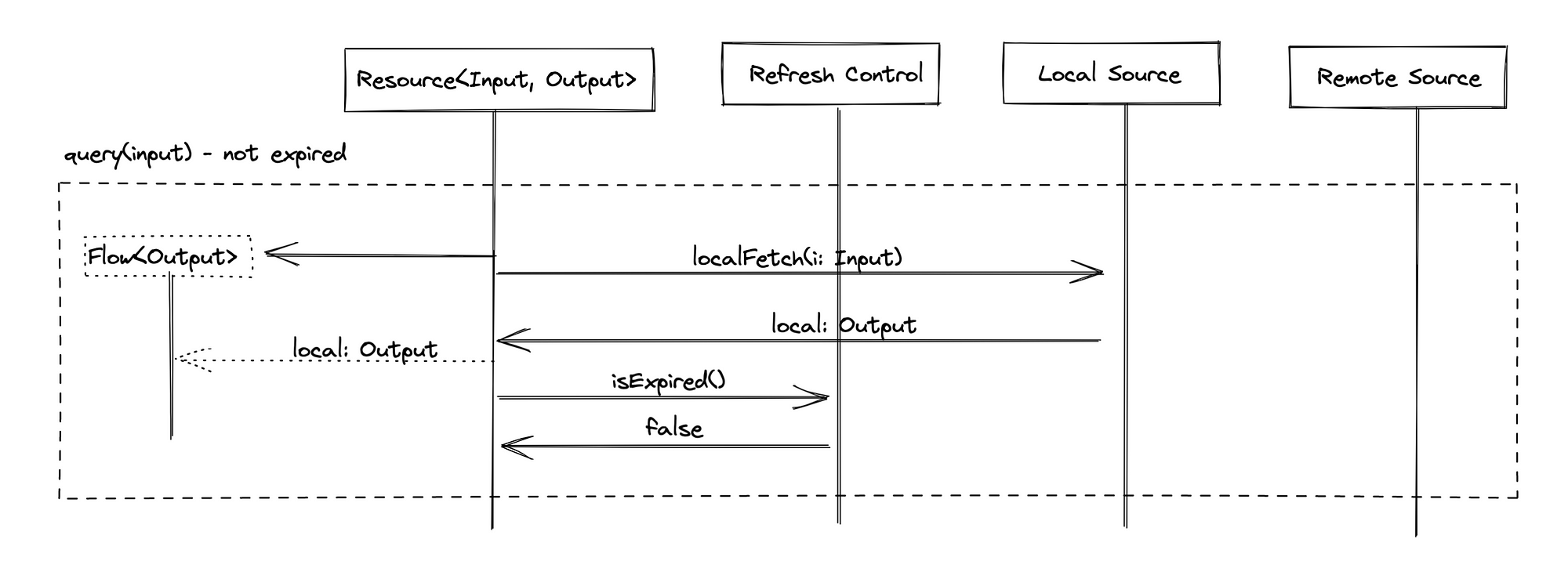

But we tried to improve the experience a bit by relying on the cache alone, without querying the API, whenever possible. So we attached a RefreshControl to the resource to control the cache validity time frame by using a timestamp.

```kotlin
package com.example.todo.base.data

import java.util.*
import java.util.concurrent.TimeUnit

class RefreshControl(
    rate: Long = DEFAULT_REFRESH_RATE_MS,
    private var lastUpdateDate: Date? = null
) : ITimeLimitedResource {

    companion object {
        val DEFAULT_REFRESH_RATE_MS = TimeUnit.MINUTES.toMillis(5)
    }

    interface Listener {
        suspend fun cleanup()
    }

    private val listeners: MutableList<Listener> = mutableListOf()
    private val children: MutableList<RefreshControl> = mutableListOf()

    // ITimeLimitedResource
    override var refreshRate: Long = rate

    override val lastUpdate: Date?
        get() = lastUpdateDate

    override suspend fun evict(cleanup: Boolean) {
        lastUpdateDate = null
        children.forEach { it.evict(cleanup) }
        if (cleanup) {
            listeners.forEach { it.cleanup() }
        }
    }

    // Public API
    fun createChild(): RefreshControl =
        RefreshControl(refreshRate, lastUpdateDate).also { children.add(it) }

    fun addListener(listener: Listener) {
        listeners.add(listener)
    }

    fun refresh() {
        lastUpdateDate = Date()
    }

    fun isExpired() = lastUpdateDate?.let { (Date().time - it.time) > refreshRate } ?: true
}
```

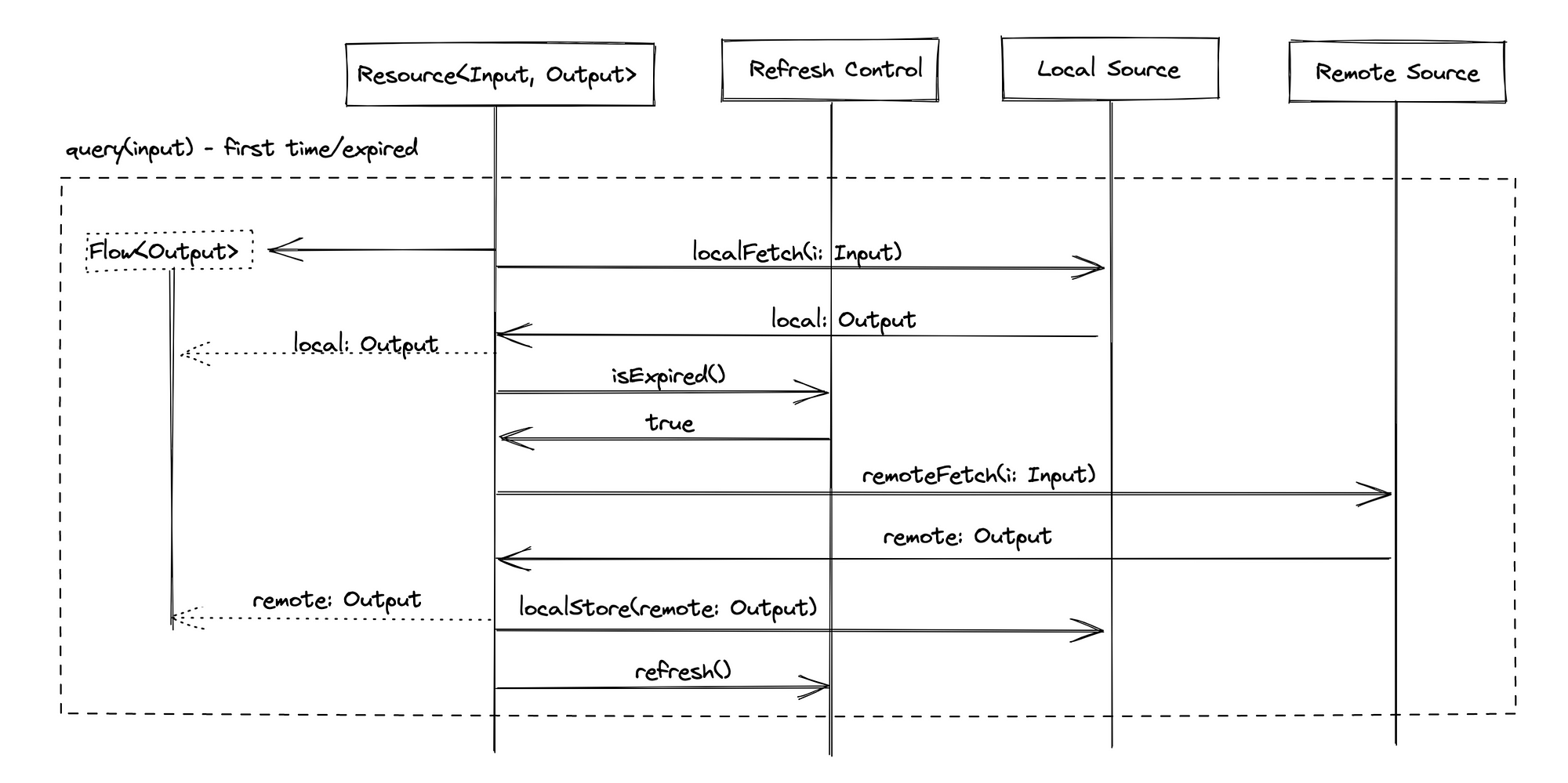

With this RefreshControl attached to a Resource, we can now identify 3 possible scenarios:

Query with valid cache

When RefreshControl holds a valid timestamp (one whose age at query time does not exceed the refresh rate), Resource relies only on the local data source to deliver results. That’s because we consider the timestamp recent enough to trust the cached data. But why do we do this? Let’s check the next scenario.

Query with empty/expired cache

When RefreshControl holds an invalid timestamp, the flow changes a bit. We still deliver cached resources from our local data source and, in addition, we query the remote data source and use its results to update the local one before delivering them. At this moment we also update the RefreshControl timestamp, tagging the last queried time for further checks. As RefreshControl is initialised with a refresh rate, we can tell when the cache will expire by combining the timestamp and that rate.

This scenario also applies for the initial query as RefreshControl persists the timestamp in memory, and when the app initialises, timestamps are missing for every RefreshControl. We did it on purpose to always fetch remote data source on the first query.
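To make the valid and expired scenarios concrete, here is a small sketch of the same comparison that RefreshControl.isExpired() performs, with the clock passed in as a parameter so the outcome is deterministic. The function signature and the sample timestamps are assumptions made for the illustration only.

```kotlin
import java.util.concurrent.TimeUnit

// Same rule as RefreshControl.isExpired(): a missing timestamp counts as expired,
// otherwise the cache expires once its age exceeds the refresh rate.
// `lastUpdateMs` and `nowMs` are illustrative parameters, not part of the original class.
fun isExpired(lastUpdateMs: Long?, nowMs: Long, refreshRateMs: Long): Boolean =
    lastUpdateMs?.let { nowMs - it > refreshRateMs } ?: true

fun main() {
    val rate = TimeUnit.MINUTES.toMillis(5)
    val t0 = 1_000_000L

    // No timestamp yet (e.g. right after app start): treated as expired.
    println(isExpired(null, t0, rate))
    // 2 minutes after the last refresh: still valid, only the cache is used.
    println(isExpired(t0, t0 + TimeUnit.MINUTES.toMillis(2), rate))
    // 6 minutes after the last refresh: expired, the remote source is queried.
    println(isExpired(t0, t0 + TimeUnit.MINUTES.toMillis(6), rate))
}
```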

Force refresh

As the cache might become invalid due to external events that the app is not aware of, we wanted to allow the user to refresh cached content manually (e.g. by pulling to refresh a list). To allow this kind of “forced” event we introduced the force flag on the query call, which skips the cache and fetches the latest data directly from the remote source. As before, the cache is updated with the resulting content.

And how exactly do we handle the internal or known app events?

Manual cleanup

By the use of evict(), we added the possibility to manually clear the stored timestamp from the RefreshControl. By doing this, next query() call to the Resource will trigger the remote fetch as it will be treated as expired.

We also faced some scenarios where we had to clear more than one related Resource at the same time. To avoid multiple calls, we allowed building a tree of controls to express those relations. A RefreshControl is able to create children linked to it, so when evict() is called on the parent control, all of its children get the same signal.

Last but not least, we added the possibility to completely erase the cached data as well. We just need to enable the cleanup flag when sending the signal, by calling evict(true).
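The parent/child propagation can be pictured with a simplified, non-suspending model. EvictionNode is a hypothetical class written for this illustration: its method names mirror RefreshControl, and the `cleaned` flag merely stands in for wiping the local store through the listeners.

```kotlin
// Simplified model of the control tree; an illustration only, not the real RefreshControl.
class EvictionNode(val name: String) {
    private val children = mutableListOf<EvictionNode>()

    var lastUpdate: Long? = null
        private set
    var cleaned = false
        private set

    fun createChild(name: String): EvictionNode =
        EvictionNode(name).also { children += it }

    fun refresh(now: Long) {
        lastUpdate = now
        cleaned = false
    }

    fun evict(cleanup: Boolean = false) {
        lastUpdate = null              // the next query will be treated as expired
        if (cleanup) cleaned = true    // stands in for deleting the cached data
        children.forEach { it.evict(cleanup) }
    }
}

fun main() {
    val todos = EvictionNode("todos")
    val todoDetail = todos.createChild("todo-detail")
    todos.refresh(now = 1L)
    todoDetail.refresh(now = 1L)

    todos.evict()
    println(todoDetail.lastUpdate) // null: the child was invalidated together with its parent
    println(todoDetail.cleaned)    // false: cached data is kept until evict(true)

    todos.evict(cleanup = true)
    println(todoDetail.cleaned)    // true: the cleanup signal reached the child as well
}
```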

Some migration guidelines

Now that we know how a Resource works, we are ready to migrate our Repository to work with it. We found out along the way that the steps were almost the same for every repository migration:

Add the local data source

As mentioned above, we usually need a local data source capable of fetching, storing, and deleting domain models. So this step is about creating a new interface for the local data source implementation. Ideally, we will inject it into the Repository later on.

```kotlin
interface ITodoCache {
    // required for List<Todo> output
    suspend fun getAllTodos(): List<Todo>?
    suspend fun storeAllTodos(todos: List<Todo>)
    suspend fun deleteAllTodos()

    // required for Todo output
    suspend fun getTodoById(id: Long): Todo?
    suspend fun storeTodo(todo: Todo)
}
```

Create the Resource instance

With both remote and local data sources in place in the Repository, we are now ready to create the related Resource<Input, Output>. Some things to care about are:

- Which RefreshControl to attach.

- The refresh rate.

- The required query parameters (or Input).

- The related domain model (or Output).

```kotlin
class TodoRepository(
    private val localSource: ITodoCache, // Cache injection
    private val remoteSource: ITodoApi
) {
    // new resource to query List<Todo>
    private val todoResource = Resource<Unit, List<Todo>>(
        { remoteSource.getAllTodos() },
        { localSource.getAllTodos() },
        localSource::storeAllTodos,
        localSource::deleteAllTodos,
        // detached RefreshControl with 5 mins rate
        RefreshControl(TimeUnit.MINUTES.toMillis(5))
    )

    //...
}
```

Migrate queries to use Resource

As the name says, this step is about updating the related query calls to our remote source so they rely on the newly created Resource. This means we are now going to deliver a Flow<Output> instead of Output, but we will deal with this later on in this article.

```kotlin
class TodoRepository(
    private val localSource: ITodoCache, // Cache injection
    private val remoteSource: ITodoApi
) {
    // new resource to query List<Todo> (initialisation shown in the previous step)
    private val todoResource: Resource<Unit, List<Todo>>

    // migrate call to use resource
    // before was like: remoteSource.getAllTodos()
    suspend fun getAllTodos(force: Boolean): Flow<List<Todo>?> =
        todoResource.query(Unit, force)
}
```

Look for update calls to evict the Resource

The final step is to recognise all the places where our cache becomes invalid. More or less, this is about looking at every add/delete/update call to the related resource API and calling the evict function after such an action has been done. One decision we took is to evict the Resource regardless of the API call result (even on failure) to ensure we always display the latest info.

```kotlin
class TodoRepository(
    private val localSource: ITodoCache, // Cache injection
    private val remoteSource: ITodoApi
) {
    // new resource to query List<Todo> (initialisation shown in a previous step)
    private val todoResource: Resource<Unit, List<Todo>>

    // call evict after every resource update attempt
    suspend fun addTodo(todo: Todo): Boolean? =
        remoteSource.addTodo(todo).also { todoResource.evict() }

    suspend fun deleteTodo(todo: Todo): Boolean? =
        remoteSource.deleteTodo(todo).also { todoResource.evict() }

    suspend fun updateTodo(todo: Todo, update: Todo): Boolean? =
        remoteSource.updateTodo(todo, update).also { todoResource.evict() }
}
```
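A note on the “evict regardless of the result” decision: also { } only runs when the remote call returns a value, which covers failures reported as a null or false result. If a data source were to throw instead (an assumption, since the demo API reports failures through its return value), a try/finally wrapper would keep the same guarantee. The sketch below uses hypothetical names (FakeControl, evictAfter) and is not taken from our code.

```kotlin
// Hypothetical stand-in for a Resource or RefreshControl; it only counts evictions.
class FakeControl {
    var evictions = 0
        private set

    fun evict() { evictions++ }
}

// Runs a write operation and always evicts afterwards, even if the operation throws.
inline fun <T> FakeControl.evictAfter(block: () -> T): T =
    try {
        block()
    } finally {
        evict()
    }

fun main() {
    val control = FakeControl()

    // Successful write: evicted afterwards.
    control.evictAfter { true }

    // Failing write: the exception still propagates, but eviction happens first.
    runCatching { control.evictAfter<Boolean> { error("HTTP 500") } }

    println(control.evictions) // 2
}
```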

As our repositories are now ready to work with cached resources, let’s move on and talk about the local data source.

Building a Data Layer

It was easy for us to tell that we had to create a new data layer to maintain all cache-related resources, as at that moment there was no existing place that fit their specs. So we came up with:

A Base Data Android module to keep all the cache and database common abstractions:

- Cache abstract class.

- BaseDao generic interface containing all base SQL methods.

Feature Data Android modules to build related feature data classes. Each of these follows the same structure:

FeatureEntity data class to represent a database table. Related 1 to 1 to a specific domain model most of the time.

```kotlin
@Entity(tableName = "todo")
data class TodoEntity(
    @PrimaryKey val uuid: Long,
    val value: String,
    val body: String,
    val completed: Boolean,
)
```

FeatureDao specification for FeatureEntity. Usually defining base functions to query, replace and delete all elements.

```kotlin
@Dao
interface TodoDao : BaseDao<TodoEntity> {
    @Query("SELECT * from todo")
    suspend fun getAll(): Array<TodoEntity>

    @Query("SELECT * from todo WHERE :uuid = uuid")
    suspend fun getById(uuid: Long): TodoEntity?

    @Query("DELETE from todo")
    suspend fun deleteAll()
}

@Transaction
suspend fun TodoDao.replaceAll(vararg todos: TodoEntity) {
    deleteAll()
    insert(*todos)
}
```


FeatureMappings extensions to define mappings between a FeatureEntity and its related domain model(s).

```kotlin
fun Todo.toEntity() = TodoEntity(
    uuid, value, body, completed
)

fun TodoEntity.toDomain() = Todo(
    value, body, completed, uuid
)
```

FeatureCache implementation. Yet again, defining functions to query, replace and delete all elements.

```kotlin
class TodosCache(
    private val dao: TodoDao,
    exceptionHandler: IExceptionHandler
) : Cache(exceptionHandler), ITodoCache {

    companion object {
        private const val tag = "LOCAL-SOURCE"
    }

    override suspend fun getAllTodos() =
        runQuery { dao.getAll().map { it.toDomain() } }

    override suspend fun getTodoById(id: Long) =
        runQuery { dao.getById(id)?.toDomain() }

    override suspend fun storeAllTodos(todos: List<Todo>) {
        runQuery {
            todos
                .map(Todo::toEntity)
                .let { dao.replaceAll(*it.toTypedArray()) }
        }
    }

    override suspend fun storeTodo(todo: Todo) {
        runQuery { dao.insert(todo.toEntity()) }
    }

    override suspend fun deleteAllTodos() {
        runQuery { dao.deleteAll() }
    }
}
```

FeatureDataModule for dependency injection.

A Data Package inside the App module to keep the Database class and related factories, as we require all feature modules to build the database. We also have to provide all Dao injections from this module as Database will be the provider object.

Remember we are using database for caching only, so wiping out the data is not really a big deal for us. That’s why we picked some of those decisions.

Nice! We have our Database in place, so now what?

Moving to consume Flows in the UI

As we now have in place a Resource to query inside the Repository that also has access to a local data source, such Repository is now able to return a Flow of results.

But in our current architecture, we access a Repository through its related UseCase, so we encapsulate the business logic inside it and deliver back the response wrapped in a Result<O> to handle the different result kinds (e.g. error, success).

As we now need to support a resultant Flow, we have to answer the main related question: whether to deliver back a Result<Flow> or a Flow<Result>.

Result Flow vs Flow of results

Inspired this time by Google I/O’s app, we included a FlowUseCase to:

- Encapsulate the Flow error handling

- Assign the proper coroutine dispatcher

- Deliver Flow<Result>

```kotlin
import kotlinx.coroutines.CoroutineDispatcher
import kotlinx.coroutines.ExperimentalCoroutinesApi
import kotlinx.coroutines.flow.Flow
import kotlinx.coroutines.flow.catch
import kotlinx.coroutines.flow.flowOn

abstract class FlowUseCase<in TParam, out TResult>(
    private val exceptionHandler: IExceptionHandler,
    private val dispatcher: CoroutineDispatcher
) {
    @ExperimentalCoroutinesApi
    @Suppress("TooGenericExceptionCaught")
    suspend operator fun invoke(param: TParam) =
        performAction(param)
            // turn upstream exceptions into a Failure emission
            .catch { exception ->
                exceptionHandler.handle(exception)
                emit(Result.Failure(exception))
            }
            // run the upstream flow on the injected dispatcher
            .flowOn(dispatcher)

    protected abstract suspend fun performAction(param: TParam): Flow<Result<TResult>>
}
```

As the base UseCase was already wrapping the resultant info inside a Result object, we chose to deliver a Flow<Result> back so that, as we enable the cache for existing features, we can reuse the same Result handling logic inside ViewModels, leaving us to just deal with the Flow itself.
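One more way to look at this choice, as a minimal sketch rather than our actual code: with a Flow of results, a cached value can reach the collector as a success, and a later remote failure arrives as one more emission instead of an exception thrown at the collector. Outcome, asOutcomes and cachedThenFailing are hypothetical names standing in for our Result type, FlowUseCase and a repository query; the sketch assumes kotlinx-coroutines-core is on the classpath.

```kotlin
import kotlinx.coroutines.flow.Flow
import kotlinx.coroutines.flow.catch
import kotlinx.coroutines.flow.flow
import kotlinx.coroutines.flow.map
import kotlinx.coroutines.flow.toList
import kotlinx.coroutines.runBlocking

// Hypothetical stand-in for the project's Result type.
sealed class Outcome<out T> {
    data class Success<T>(val value: T) : Outcome<T>()
    data class Failure(val error: Throwable) : Outcome<Nothing>()
}

// Wraps every value in Success and converts an upstream exception into a final Failure.
fun <T> Flow<T>.asOutcomes(): Flow<Outcome<T>> =
    map<T, Outcome<T>> { Outcome.Success(it) }
        .catch { emit(Outcome.Failure(it)) }

// Simulates a query that delivers cached data and then fails on the remote call.
fun cachedThenFailing(): Flow<List<String>> = flow {
    emit(listOf("cached todo"))
    throw IllegalStateException("network down")
}

fun main(): Unit = runBlocking {
    // The collector receives the cached list as a Success, followed by a Failure.
    cachedThenFailing().asOutcomes().toList().forEach { println(it) }
}
```

With a Result<Flow> the collector would instead have to guard the collection itself against exceptions, which is the handling we wanted to keep out of the ViewModel.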

Collecting Results

As the Flow reaches the ViewModel, we had to think about 3 main topics:

- Flow result handling

As mentioned before, for the existing scenarios we already had in place the logic required to deal with a Result object. So the easiest thing to do for a Flow<Result> was to collect() it and handle every Result the same way as before.

```kotlin
viewModelScope.launch {
    getAllTodosUseCase(force)
        .collect { response ->
            (response as? Result.Success)?.let { _todos.value = it.result }
        }
}
```

- Loading feedback

When we spoke about Resource, we mentioned that the resultant Flow is closed after the remote info is delivered. This limited lifetime was chosen on purpose, so we can show our loading UI for as long as the requested Flow lives.

```kotlin
viewModelScope.launch {
    getAllTodosUseCase(force)
        .onStart { _refreshing.value = true }
        .onCompletion { _refreshing.value = false }
        .collect { response ->
            (response as? Result.Success)?.let { _todos.value = it.result }
        }
}
```

- Error handling

As logic to deal with Result already exists in our code, we had this one covered already. The only required thing to do is to deal with an error Result scenario inside the collect function.

```kotlin
viewModelScope.launch {
    getAllTodosUseCase(force)
        .onStart { _refreshing.value = true }
        .onCompletion { _refreshing.value = false }
        .collect { response ->
            when (response) {
                is Result.Success -> _todos.value = response.result
                is Result.Failure -> _error.value = response.error
            }
        }
}
```

Dealing with forced refresh

As we are now working with cache, the UI now needs to enable some way for the user to manually refresh the cached data. Resource is already prepared to deal with this, but UI needs some elements to enable this feature.

For most of our views, we opted to include the “pull to refresh” component, as it already handles the loading UI as well. The only thing to do is to include this element in the UI and send the force flag back to the Resource when triggered.

```xml
<androidx.swiperefreshlayout.widget.SwipeRefreshLayout
    android:id="@+id/swipe_refresh"
    android:layout_width="match_parent"
    android:layout_height="match_parent"
    app:layout_behavior="@string/appbar_scrolling_view_behavior">

    <androidx.recyclerview.widget.RecyclerView
        android:id="@+id/todo_list"
        android:layout_width="match_parent"
        android:layout_height="match_parent"
        android:orientation="vertical"
        app:layoutManager="androidx.recyclerview.widget.LinearLayoutManager"
        tools:listitem="@layout/list_item_todo" />

</androidx.swiperefreshlayout.widget.SwipeRefreshLayout>
```

```kotlin
@AndroidEntryPoint
class TodoListFragment : StackFragment() {

    override fun onCreateView(
        inflater: LayoutInflater, container: ViewGroup?,
        savedInstanceState: Bundle?
    ): View? {
        bindings = FragmentTodoListBinding.inflate(inflater, container, false)
        bindings.viewModel = viewModel
        bindings.lifecycleOwner = viewLifecycleOwner
        bindings.todoList.adapter = adapter

        // Attach refresh listener
        with(bindings.swipeRefresh) {
            setOnRefreshListener(viewModel)
        }
        return bindings.root
    }

    private fun observeViewModel() {
        viewModel.todos.observe(viewLifecycleOwner) {
            adapter.submitList(it)
        }
        // Enable loading spinner
        viewModel.refreshing.observe(viewLifecycleOwner) {
            bindings.swipeRefresh.isRefreshing = it
        }
    }
}
```

```kotlin
@HiltViewModel
class TodoListViewModel @Inject constructor( // Hilt needs an @Inject-annotated constructor
    private val getAllTodosUseCase: GetAllTodosUseCase
) : ViewModel(), SwipeRefreshLayout.OnRefreshListener {

    private val _todos = MutableLiveData<List<Todo>>()
    val todos: LiveData<List<Todo>>
        get() = _todos

    private val _refreshing = MutableLiveData<Boolean>(false)
    val refreshing: LiveData<Boolean>
        get() = _refreshing

    // initial fetch triggered onViewCreated
    fun onViewCreated() {
        refresh()
    }

    // pull to refresh provided by SwipeRefreshLayout.OnRefreshListener
    override fun onRefresh() {
        refresh(true)
    }

    private fun refresh(force: Boolean = false) {
        viewModelScope.launch {
            getAllTodosUseCase(force)
                .onStart { _refreshing.value = true }
                .onCompletion { _refreshing.value = false }
                .collect { response ->
                    when (response) {
                        is Result.Success -> _todos.value = response.result
                        else -> Unit //TODO handle error
                    }
                }
        }
    }
}
```

Answering some questions

When we started with this research, we raised some questions ourselves about how this whole feature was supposed to work. With the solution in place, the time has come for some answers:

Database encryption

I won’t write about this today, but we managed to encrypt the Database with the help of SQLCipher and some extra tools.

Database migrations

Room provides a great migration system. But the easiest solution for us was to wipe the database when its version increases, which Room supports out of the box through its destructive migration fallback.

Database clean up

Room also provides functions to wipe out the data manually. So we just had to find the place for it.

Cache replace strategy

Following Resource cache strategy, the suggested way is always to replace with new content when in conflict. There were some specific cases where we have to wipe data in advance before inserting latest elements, but same strategy is applied.

Repository fetch strategy

The three main scenarios for Resource.query() with the RefreshControl should give you most of this answer. We chose to rely on cache whenever possible by the use of a timestamp to tag last remote query time which, when expired, enables the next remote query call.

Flow creation and lifetime

Resource will create and clear the query-related Flow. This means the resultant Flow lives as long as Resource.query() function call takes to fetch and deliver results from both local and remote data sources.

It was already mentioned we opted for this approach as we had less code to refactor to enable caches in our code.

But it is worth mentioning that there are other ways to go here, like the “channel” approach allowed by Room, where you get back a Flow/LiveData from your database and keep it open, so that whenever a change affects the query, an update is emitted.

Use Case with Flow

FlowUseCase has been created to enable work with Flow for a UseCase. This new abstraction delivers back a Flow<Result> to better isolate each Result handling (and to improve coding speed in our case).

Flow handling on ViewModel

Related to the FlowUseCase and Flow lifetime, we created some guidelines to deal with Flow<Result> in our ViewModel.

Final Thoughts

I cannot tell if this architecture we built is the best one to follow or not, but I can say it has worked so far for us.

After the initial analysis, a single dev spent 2 weeks setting up the proposed abstractions and the implementation for the easiest scenario we considered.

After successfully deploying this, we started working in parallel to implement all remaining use cases (more or less 10 more resources), and implementation was fast for those as well. The proposed solution allowed us to gradually deploy cache resources across different releases, and it is still easy to maintain while adding new features.

Of course, we found some issues along the way, e.g. how to deal with complex data entities and relations, or advanced queries in Room, and we tried to document such issues and suggested approaches as well.

I can proudly say that today the Sync. Android app has full offline support.

It is worth mentioning again that I’ve updated the ToDo demo application from my previous article with all these implementation steps. It contains all the setup I presented in this article and some extra support classes and extensions (e.g. ResourceGroup.kt) in case you want to take a deeper look.

If you have been interested in what we do at some point during this reading, feel free to reach us here. We are always looking for talented people to join us in our journey. You are also welcome to try out the app and send us your feedback as well, will be greatly appreciated!

Thanks for reading again!

Thanks to Rafa Ortega.