In this digital world, we want to stay connected with our friends and family.

The demand for video calling apps is increasing day by day.

We can build apps that keep us connected using WebRTC.

The flow of the Article:

- What are WebRTC and PeerJS?

- Basics about WebRTC.

- Developing a Video Calling App using Peer.js and WebViews in Android.

What are WebRTC and PeerJS?

WebRTC stands for Web Real-Time Communication. It lets you exchange video, audio, and arbitrary data directly between peers. WebRTC is built into modern web browsers; in native apps, you need to add the WebRTC libraries yourself.

PeerJS is a wrapper around the browser's WebRTC implementation that makes it easy to add video and audio streaming. It abstracts away most of the hard parts we would otherwise have to handle ourselves. We will use a WebView to render the HTML page and call its JavaScript code.

Basics about WebRTC.

WebRTC establishes peer-to-peer connections using a set of protocols, which cover four stages.

- Signaling: The signaling process sets up and controls the communication session. Peers describe their sessions using SDP (Session Description Protocol), a plain-text format that contains data about: a) the locations of the peers (their IP addresses), b) the media types.

- Connecting: In WebRTC, connections are peer-to-peer rather than client-server. Connecting two peers directly can be difficult, for example when they sit behind NATs or firewalls. This problem is solved using ICE together with STUN and TURN servers.

a). ICE stands for Interactive Connectivity Establishment. It gathers the possible routes (candidates) between two peers and picks the best one that works, so that data takes the most direct path available.

b). STUN stands for Session Traversal Utilities for NAT. It is a standard protocol for NAT traversal: the WebRTC client asks a STUN server for its public IP address.

c). TURN stands for Traversal Using Relays around NAT. When a direct connection cannot be established with STUN alone, the peers relay their media streams through the TURN server.

- Securing Data Exchange: WebRTC encrypts the data exchanged between peers. It uses two protocols, SRTP and DTLS.

a). SRTP stands for Secure Real-Time Transport Protocol. It is a secure version of RTP that provides encryption, message authentication, and replay protection for the transmitted audio and video traffic.

b). DTLS stands for Datagram Transport Layer Security. It secures datagram-based (UDP) communication. Like UDP itself, it does not guarantee ordered delivery, which keeps latency low.

- Exchanging Data: Using WebRTC, we can transfer data, audio, and video streams. Audio and video streams are carried using the RTP and RTCP protocols.

a). RTP stands for Real-time Transport Protocol. It carries media streams in real time.

b). RTCP stands for RTP Control Protocol. It carries control messages alongside RTP: information about the transmission and quality of the data, and feedback that recipients send back to the source.
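To make the signaling step above more concrete, here is a minimal Kotlin sketch that reads the two pieces of information mentioned there (peer address and media types) out of an SDP text. The names `SdpSummary`, `summarizeSdp`, and `sampleSdp` are made up for this illustration, and the sample text is a hand-written, heavily shortened SDP, not a real offer. In practice, browsers often put a placeholder address in the `c=` line and exchange the real addresses as ICE candidates, so treat this only as a way to see what SDP looks like.

```kotlin
// Illustrative only: a tiny reader for two SDP fields, not a full SDP parser.
data class SdpSummary(val connectionAddress: String?, val mediaTypes: List<String>)

fun summarizeSdp(sdp: String): SdpSummary {
    var address: String? = null
    val media = mutableListOf<String>()
    for (rawLine in sdp.lines()) {
        val line = rawLine.trim()
        when {
            // c=<nettype> <addrtype> <connection-address>, e.g. "c=IN IP4 203.0.113.7"
            line.startsWith("c=") && address == null ->
                address = line.removePrefix("c=").split(" ").getOrNull(2)
            // m=<media> <port> <proto> <fmt> ..., e.g. "m=audio 9 UDP/TLS/RTP/SAVPF 111"
            line.startsWith("m=") ->
                media += line.removePrefix("m=").substringBefore(' ')
        }
    }
    return SdpSummary(address, media)
}

// Hand-written sample; 203.0.113.7 is a documentation-only address.
val sampleSdp = """
    v=0
    o=- 46117317 2 IN IP4 127.0.0.1
    s=-
    c=IN IP4 203.0.113.7
    t=0 0
    m=audio 9 UDP/TLS/RTP/SAVPF 111
    m=video 9 UDP/TLS/RTP/SAVPF 96
""".trimIndent()
```

Calling `summarizeSdp(sampleSdp)` yields the address `203.0.113.7` and the media types `audio` and `video`. With PeerJS you never have to parse SDP yourself; the library exchanges it for you.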

Developing a Video Calling App using Peer.js and WebViews in Android.

Let’s start working on it.

Here is the GitHub link.

Step 1: Create a new Android Project.

Step 2: You need to add 4 files to your Android project.

Here is the link.

Place the files in the assets folder:

VideoCallDemo/app/src/main/assets/

Step 3: You need to add these permissions in AndroidManifest.xml.

```xml
<uses-permission android:name="android.permission.INTERNET"/>
<uses-permission android:name="android.permission.CAMERA"/>
<uses-permission android:name="android.permission.RECORD_AUDIO"/>
<uses-permission android:name="android.permission.MODIFY_AUDIO_SETTINGS"/>
```

I am using this dependency to handle runtime permissions in Jetpack Compose.

```groovy
implementation "com.google.accompanist:accompanist-permissions:0.27.1"
```

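```kotlin
import android.Manifest
import android.os.Bundle
import androidx.activity.ComponentActivity
import androidx.activity.compose.setContent
import androidx.compose.runtime.LaunchedEffect
import com.google.accompanist.permissions.ExperimentalPermissionsApi
import com.google.accompanist.permissions.rememberMultiplePermissionsState

class MainActivity : ComponentActivity() {
    @OptIn(ExperimentalPermissionsApi::class)
    override fun onCreate(savedInstanceState: Bundle?) {
        super.onCreate(savedInstanceState)
        // mainActivity is a reference declared outside this snippet;
        // the end-call button uses it to finish the activity.
        mainActivity = this
        setContent {
            val permissionState =
                rememberMultiplePermissionsState(
                    listOf(
                        Manifest.permission.CAMERA,
                        Manifest.permission.RECORD_AUDIO,
                    )
                )
            // Ask for camera and microphone access when the screen first appears.
            LaunchedEffect(key1 = true) {
                permissionState.launchMultiplePermissionRequest()
            }
            // Show the call UI only after both permissions are granted.
            if (permissionState.allPermissionsGranted) {
                VideoCallCompose()
            }
        }
    }
}
```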

Step 4: We will create an XML layout file (activity_call.xml) that hosts the WebView.

```xml
<?xml version="1.0" encoding="utf-8"?>
<androidx.constraintlayout.widget.ConstraintLayout xmlns:android="http://schemas.android.com/apk/res/android"
    xmlns:app="http://schemas.android.com/apk/res-auto"
    android:layout_width="match_parent"
    android:layout_height="match_parent">

    <WebView
        android:id="@+id/webView"
        android:layout_width="374dp"
        android:layout_height="612dp"
        android:layout_marginStart="1dp"
        android:layout_marginEnd="1dp"
        app:layout_constraintBottom_toBottomOf="parent"
        app:layout_constraintEnd_toEndOf="parent"
        app:layout_constraintHorizontal_bias="0.491"
        app:layout_constraintStart_toStartOf="parent"
        app:layout_constraintTop_toTopOf="parent"
        app:layout_constraintVertical_bias="0.078" />

</androidx.constraintlayout.widget.ConstraintLayout>
```

Now, we will add our XML file to our composable.

```kotlin
AndroidView(factory = {
    val layoutInflater: LayoutInflater = LayoutInflater.from(it)
    val view: View = layoutInflater.inflate(R.layout.activity_call, null, false)
    view
}, update = { view ->
    val webView: WebView = view.findViewById(R.id.webView)
    webViewCompose = webView
    webView.loadUrl(mUrl)
    WebRTCUtil.setupWebView(webView)
})
```


Step 5: Now, create the UI for the mic, video, and end-call buttons.

```kotlin
Column(verticalArrangement = Arrangement.Bottom, modifier = Modifier.fillMaxHeight()) {
    Row(modifier = Modifier.fillMaxWidth(), horizontalArrangement = Arrangement.Center) {
        Image(
            modifier = Modifier.clickable {
                isAudio = !isAudio
                WebRTCUtil.callJavaScriptFunction(
                    webViewCompose!!,
                    "javascript:toggleAudio(\"$isAudio\")"
                )
            },
            painter = painterResource(id = if (isAudio) R.drawable.btn_mute_normal else R.drawable.btn_unmute_normal),
            contentDescription = "audio"
        )
        Image(
            modifier = Modifier.clickable {
                WebRTCUtil.callJavaScriptFunction(
                    webViewCompose!!,
                    "javascript:disconnectCall()"
                )
                mainActivity.finish()
            },
            painter = painterResource(id = R.drawable.btn_endcall_normal),
            contentDescription = "end"
        )
        Image(
            modifier = Modifier.clickable {
                isVideo = !isVideo
                WebRTCUtil.callJavaScriptFunction(
                    webViewCompose!!,
                    "javascript:toggleVideo(\"$isVideo\")"
                )
            },
            painter = painterResource(id = if (isVideo) R.drawable.btn_video_muted else R.drawable.btn_video_normal),
            contentDescription = "video"
        )
    }
}
```

Step 6: Now, put everything together in the VideoCallCompose composable.

```kotlin
@Composable
fun VideoCallCompose() {
    Scaffold(
        content = { MyContent() }
    )
}

@Composable
fun MyContent() {
    // call.html is one of the files placed in the assets folder in Step 2.
    val mUrl = "file:///android_asset/call.html"
    // Current mic and camera state, mirrored to the page through JavaScript calls.
    var isAudio by remember {
        mutableStateOf(true)
    }
    var isVideo by remember {
        mutableStateOf(true)
    }
    // Reference to the inflated WebView so the buttons can call into the page.
    var webViewCompose: WebView? by remember {
        mutableStateOf(null)
    }
    Column(
        modifier = Modifier.fillMaxSize(),
        verticalArrangement = Arrangement.Top,
        horizontalAlignment = Alignment.CenterHorizontally
    ) {
        // Inflate the XML layout from Step 4 and load the call page into its WebView.
        AndroidView(factory = {
            val layoutInflater: LayoutInflater = LayoutInflater.from(it)
            val view: View = layoutInflater.inflate(R.layout.activity_call, null, false)
            view
        }, update = { view ->
            val webView: WebView = view.findViewById(R.id.webView)
            webViewCompose = webView
            webView.loadUrl(mUrl)
            WebRTCUtil.setupWebView(webView)
        })
        // Call controls from Step 5: mic toggle, end call, camera toggle.
        Column(verticalArrangement = Arrangement.Bottom, modifier = Modifier.fillMaxHeight()) {
            Row(modifier = Modifier.fillMaxWidth(), horizontalArrangement = Arrangement.Center) {
                Image(
                    modifier = Modifier.clickable {
                        isAudio = !isAudio
                        WebRTCUtil.callJavaScriptFunction(
                            webViewCompose!!,
                            "javascript:toggleAudio(\"$isAudio\")"
                        )
                    },
                    painter = painterResource(id = if (isAudio) R.drawable.btn_mute_normal else R.drawable.btn_unmute_normal),
                    contentDescription = "audio"
                )
                Image(
                    modifier = Modifier.clickable {
                        WebRTCUtil.callJavaScriptFunction(
                            webViewCompose!!,
                            "javascript:disconnectCall()"
                        )
                        mainActivity.finish()
                    },
                    painter = painterResource(id = R.drawable.btn_endcall_normal),
                    contentDescription = "end"
                )
                Image(
                    modifier = Modifier.clickable {
                        isVideo = !isVideo
                        WebRTCUtil.callJavaScriptFunction(
                            webViewCompose!!,
                            "javascript:toggleVideo(\"$isVideo\")"
                        )
                    },
                    painter = painterResource(id = if (isVideo) R.drawable.btn_video_muted else R.drawable.btn_video_normal),
                    contentDescription = "video"
                )
            }
        }
    }
}
```

As you can see, we call a method named callJavaScriptFunction(). It lets us invoke the JavaScript functions defined in the page, such as toggleAudio, toggleVideo, startCall, and disconnectCall.
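The strings passed to callJavaScriptFunction() above are built by hand with escaped quotes. As a minimal sketch, the helper below builds the same kind of `javascript:` string and escapes the arguments. The names `escapeForJs` and `buildJsCall` are hypothetical and are not part of the article's WebRTCUtil, whose implementation is not shown here. Treating every argument as a quoted string mirrors how the code above passes `"$isAudio"`.

```kotlin
// Hypothetical helpers for illustration; not part of WebRTCUtil.

/** Escapes a value so it can sit inside a double-quoted JavaScript string literal. */
fun escapeForJs(value: String): String =
    value
        .replace("\\", "\\\\") // backslashes first, so later escapes are not doubled
        .replace("\"", "\\\"")
        .replace("\n", "\\n")
        .replace("\r", "\\r")

/** Builds a string such as javascript:toggleAudio("true") from a function name and arguments. */
fun buildJsCall(functionName: String, vararg args: String): String {
    val joined = args.joinToString(", ") { "\"${escapeForJs(it)}\"" }
    return "javascript:$functionName($joined)"
}
```

For example, `buildJsCall("toggleAudio", isAudio.toString())` produces the same string as the hand-written `"javascript:toggleAudio(\"$isAudio\")"`, and `buildJsCall("disconnectCall")` produces `javascript:disconnectCall()`. On Android, a string like this is typically handed to `WebView.loadUrl()`, or the part after `javascript:` to `WebView.evaluateJavascript()`.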

We load the call.html file in the WebView.

You need to pass a uniqueId to the init() JavaScript method. To place a call, call the startCall() JavaScript method and pass the uniqueId of the remote peer. For that, you would normally store each uniqueId in a backend and fetch it from there.

For simplicity, I will not store it in a backend, since the main purpose is to show how to use a WebView and Peer.js in Android.
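If you do add a backend later, its job is small: remember which uniqueId belongs to which user. The sketch below shows that idea with an in-memory map standing in for the backend. `PeerDirectory`, `register`, and `lookup` are hypothetical names, and generating the ID with `UUID` is an assumption made for this example rather than what the demo app does.

```kotlin
import java.util.UUID

// Hypothetical in-memory stand-in for a backend that stores peer IDs per user.
class PeerDirectory {
    private val idsByUser = mutableMapOf<String, String>()

    /** Generates a fresh ID for [userName], stores it, and returns it. */
    fun register(userName: String): String {
        val peerId = UUID.randomUUID().toString()
        idsByUser[userName] = peerId
        return peerId
    }

    /** Returns the ID registered for [userName], or null if that user is unknown. */
    fun lookup(userName: String): String? = idsByUser[userName]
}
```

With something like this in place, the first device would pass the value returned by `register("alice")` to init(), and the second device would pass the result of `lookup("alice")` to startCall(). A real backend would do the same over the network instead of in memory.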

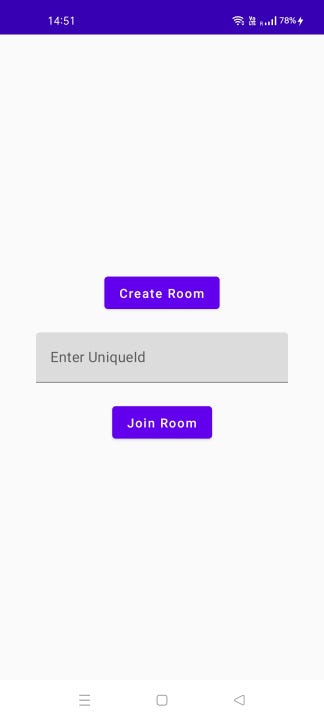

Using the UI.

First, click on Create Room. It will generate a unique Id.

Now, on the second phone, enter that peer ID in the text field and click Join Room.

I have not added any validation in the UI, so make sure you enter the correct ID.
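If you want to add a basic check yourself, a minimal sketch could look like the following. The rule is an assumption modeled on the PeerJS documentation, which says IDs must start and end with an alphanumeric character and may contain spaces, dashes, and underscores in the middle. `PEER_ID_PATTERN` and `normalizePeerId` are hypothetical names. The check only catches malformed input; it cannot tell whether a well-formed ID actually belongs to a waiting peer.

```kotlin
// Assumed rule (modeled on the PeerJS docs): alphanumeric groups joined by a
// single space, underscore, or dash.
val PEER_ID_PATTERN = Regex("^[A-Za-z0-9]+(?:[ _-][A-Za-z0-9]+)*$")

/** Returns the trimmed ID if it looks well-formed, or null otherwise. */
fun normalizePeerId(input: String): String? {
    val candidate = input.trim()
    return if (PEER_ID_PATTERN.matches(candidate)) candidate else null
}
```

The Join Room button could call `normalizePeerId()` on the text field value and only proceed when the result is not null.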

Now, create or join a room.

As you can see, I have connected two devices and video calling is working.

Thank You For Reading.

This article was originally published on proandroiddev.com on December 08, 2022