Hi everyone!

My name is Eugene, and I love automated tests. I also happen to be one of the co-authors of Kaspresso, an open source library for writing automated tests for Android applications, and the author of various articles and talks about tests (“Kaspresso: The autotest framework that you have been looking forward to. Part I”, “Autotests on Android. The entire picture”, “How to start to writing autotests and not go crazy”). My friend Sergei Iartsev (CTO at HintEd) has fallen in love with automated tests as well; in his work, he deals with test automation for various platforms on a daily basis.

In my article “Autotests on Android. The entire picture”, I outlined 5 major areas:

- writing tests,

- test runner,

- where to run tests,

- infrastructure,

- other stuff, including test reports, CI/CD integration, and so on.

A couple of years ago (in 2019–2020), writing automated tests caused us a lot of pain, so we created Kaspresso. Now developers and testers can forget about problems such as flakiness, logging and performance, and concentrate on writing tests with a nice, simple DSL. However, development teams still struggle with other areas of test automation, such as infrastructure, where one has to delve into the magic world of DevOps or even high-load systems.

Recently we became curious about the state of automated testing in different teams. To find out, Sergei and I interviewed more than 30 teams. Thirty may seem like a drop in the bucket, but it is still more than 1, 2 or 5, so we believe our findings are meaningful.

The Research

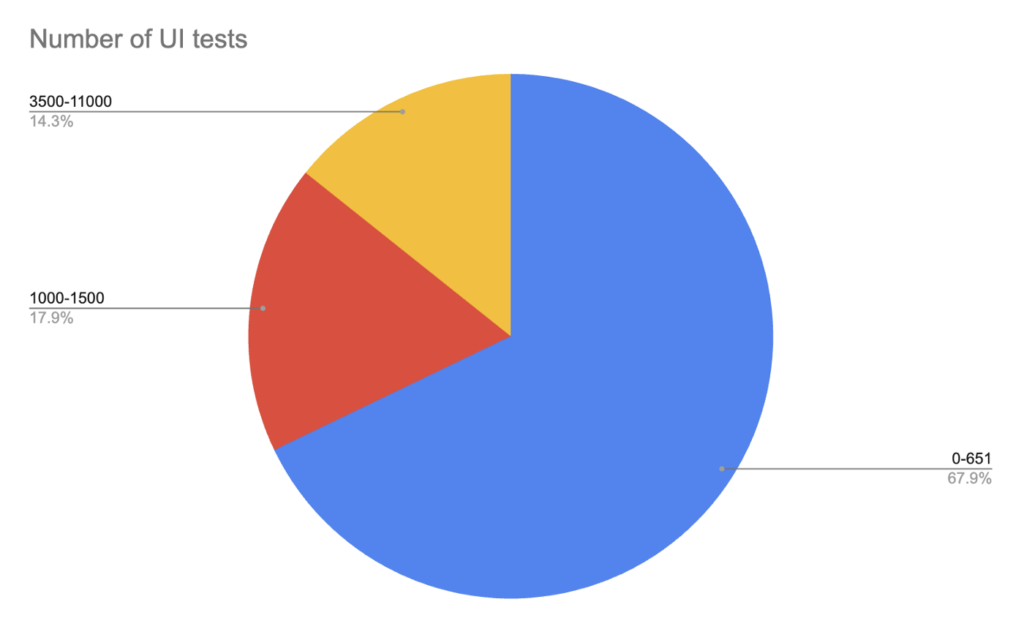

So which teams did we have the pleasure of interviewing? Many of them work for companies based in Russia, while several are from European, American and Australian companies. Among them are Spotify, Revolut, Badoo, Auto.ru, Sber, HeadHunter and others. They all write UI tests in one form or another, and the number of those tests varies widely:

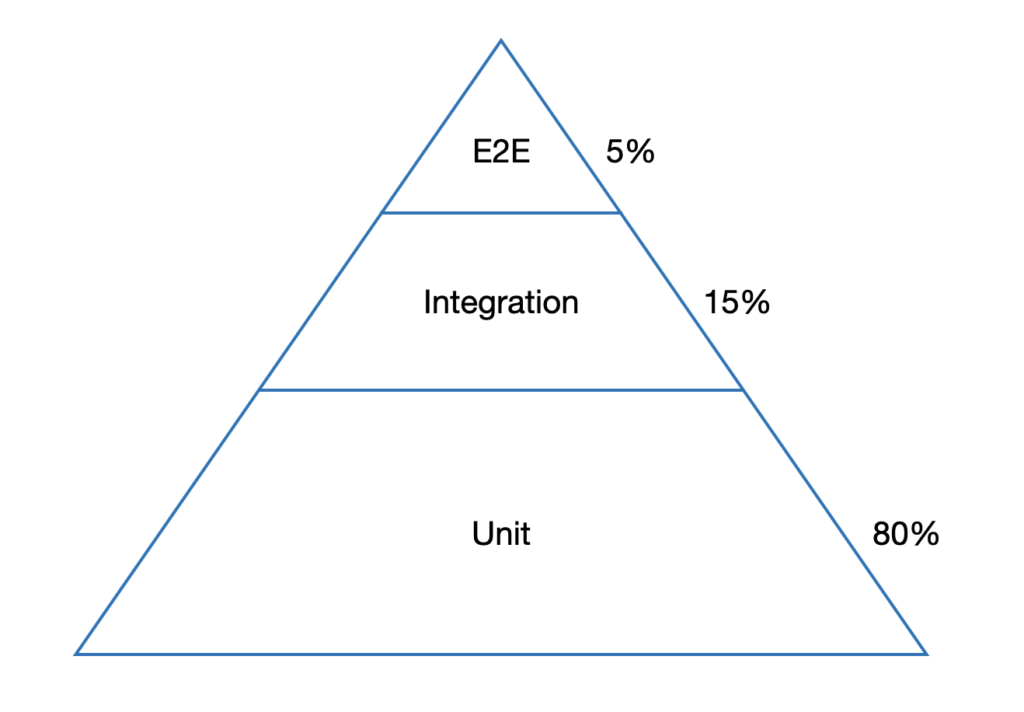

Another point we paid close attention to was compliance with the test pyramid. As a quick reminder, in a healthy test pyramid unit tests make up around 80% of all tests, while E2E tests (or UI tests) are left with a humble 5%; the remainder falls into the middle layer (e.g. integration tests).

75% of our respondents follow this concept fully or with minor deviations. For the other 25%, something went very wrong: they have more E2E tests than unit tests. But we certainly will not name any names; this will remain our little secret. 🙂

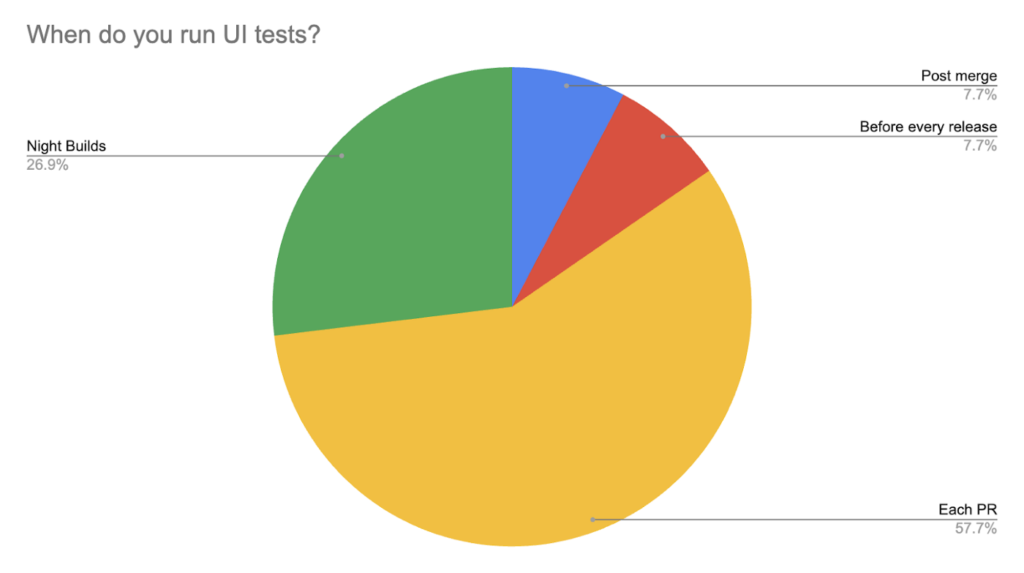
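To make the pyramid proportions above concrete, here is a small Kotlin sketch that classifies a suite by its test counts. The 80% and 5% thresholds come from the rule of thumb quoted above; the `PyramidShape` buckets and the `pyramidShape` function are our own hypothetical illustration, not part of any tool.

```kotlin
// Hypothetical buckets for how a suite compares with the rule of thumb above.
enum class PyramidShape { ON_TARGET, DEVIATES, INVERTED }

fun pyramidShape(unit: Int, integration: Int, e2e: Int): PyramidShape {
    val total = unit + integration + e2e
    require(total > 0) { "the suite must contain at least one test" }
    val unitShare = unit.toDouble() / total
    val e2eShare = e2e.toDouble() / total
    return when {
        // More E2E tests than unit tests: the pyramid is upside down.
        e2e > unit -> PyramidShape.INVERTED
        // At least 80% unit tests and at most 5% E2E tests.
        unitShare >= 0.80 && e2eShare <= 0.05 -> PyramidShape.ON_TARGET
        else -> PyramidShape.DEVIATES
    }
}
```

For instance, 850 unit, 120 integration and 30 E2E tests land in `ON_TARGET`, while 100 unit tests next to 200 E2E tests are `INVERTED`.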

Our next question was about the earliest stage at which UI tests are launched. Note that this need not be the full suite: it may be a particular subset or a selection based on impact analysis, and some tests may use mocked data. The picture is as follows:

More than half of our respondents launch their UI tests on pull requests, and another quarter run them in regular nightly builds. Running tests only before a release is clearly a thing of the past. It is also worth noting that more than half of our respondents use mocked data in their tests. In our experience, it is nearly impossible to keep tests stable without mocks, especially on PRs.

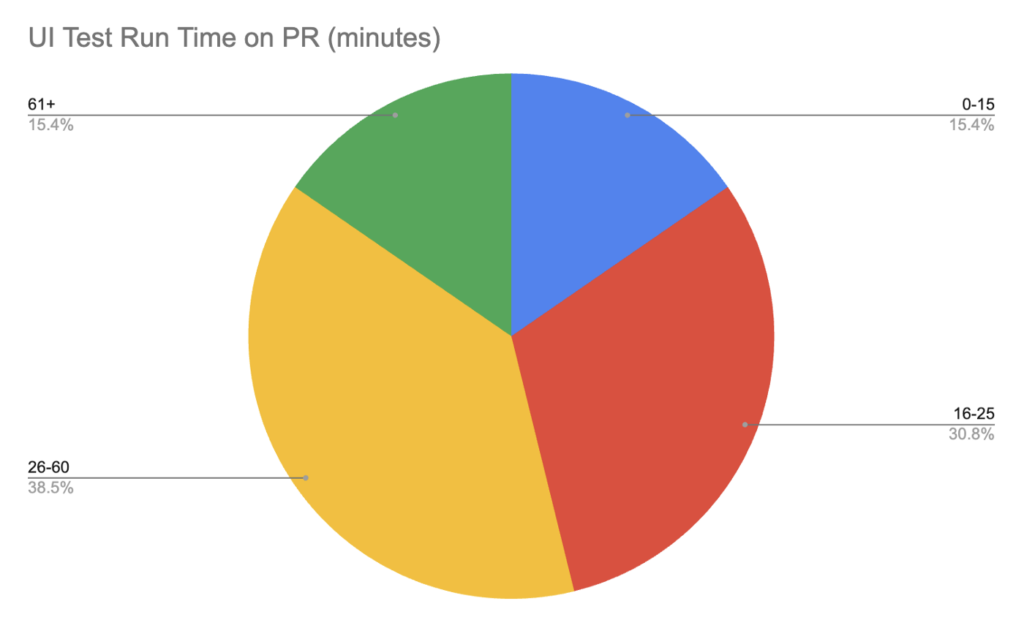
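Impact analysis, mentioned above as one way to pick a PR subset, can be sketched very roughly as follows. This assumes a hypothetical mapping from each UI test to the source paths it exercises (real setups usually derive it from module dependency graphs or coverage data), plus a hypothetical list of "run everything" paths such as shared core code or build configuration.

```kotlin
// Hypothetical test metadata: the source path prefixes a UI test exercises.
data class UiTest(val name: String, val coveredPaths: Set<String>)

// Selects the tests whose covered paths contain at least one changed file.
// Changes under any `runAllPrefixes` path (shared code, build config) are
// treated as affecting everything, so the whole suite is returned.
fun selectImpactedTests(
    tests: List<UiTest>,
    changedFiles: List<String>,
    runAllPrefixes: Set<String> = setOf("core/", "build.gradle")
): List<UiTest> {
    if (changedFiles.any { file -> runAllPrefixes.any { file.startsWith(it) } }) return tests
    return tests.filter { test ->
        changedFiles.any { file -> test.coveredPaths.any { file.startsWith(it) } }
    }
}
```

A PR that only touches login code would then run only the login tests, while a change to shared code falls back to the full suite; the fallback trades PR time for safety.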

What about the average test run time on a PR? Let us look at the chart below:

We have repeatedly heard the claim that developers are willing to wait no more than 15 minutes for test results. Otherwise, they switch to a different task, and the current PR stalls. Unfortunately, we have no research at hand to back this up. But if we take 15 minutes as a benchmark, many teams have room for improvement, and infrastructure scalability is the key. Note that the duration of other test runs (nightly builds, post-merge) is less critical; they may take up to 2 or 3 hours.

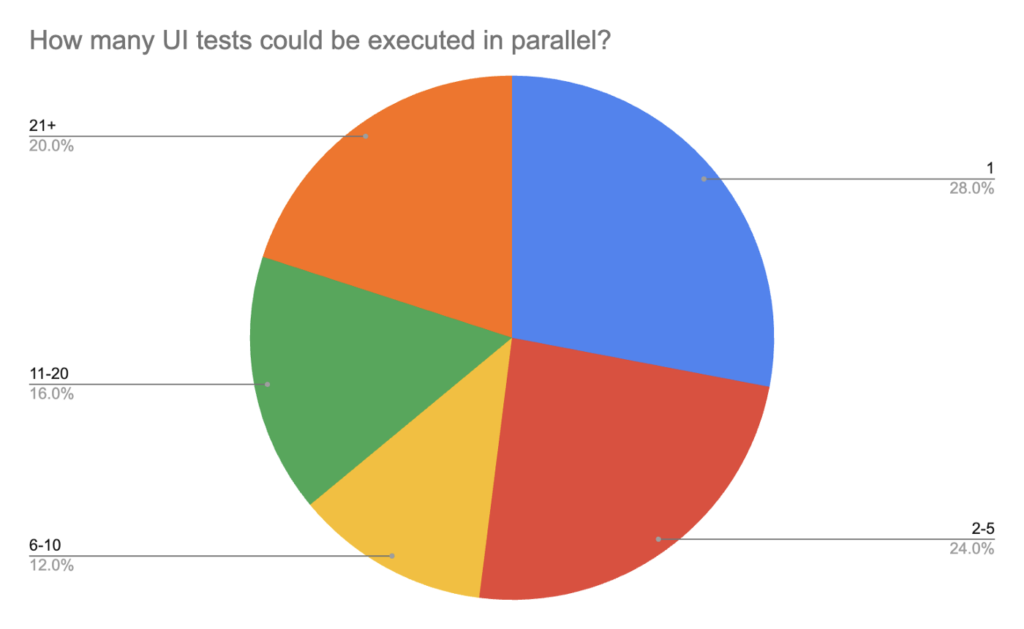
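A back-of-the-envelope way to see why scalability matters for the 15-minute target: if tests split evenly across emulators and each run pays a fixed overhead (emulator boot, APK installation) that parallelism cannot remove, the number of emulators needed follows directly. Both the even split and the overhead figure are simplifying assumptions, and `emulatorsNeeded` is a hypothetical helper.

```kotlin
import kotlin.math.ceil

// Rough estimate of how many emulators a suite needs to fit a time budget.
// Assumes tests split evenly across emulators and a fixed per-run overhead
// (boot, APK install) that every emulator pays regardless of parallelism.
fun emulatorsNeeded(
    totalTestMinutes: Double,
    overheadMinutes: Double,
    budgetMinutes: Double = 15.0
): Int {
    val availablePerEmulator = budgetMinutes - overheadMinutes
    require(availablePerEmulator > 0) { "overhead alone exceeds the budget" }
    return ceil(totalTestMinutes / availablePerEmulator).toInt()
}
```

With made-up numbers, 120 minutes of sequential test time and 3 minutes of overhead give `emulatorsNeeded(120.0, 3.0)` = 10 emulators to finish within 15 minutes, far more than the 5 or fewer that many teams use.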

While we are on the topic of scalability, take a look at another chart, which shows how many tests our respondents run in parallel:

About half use no more than 5 emulators. Almost all respondents who run tests in a single thread do not run tests on PRs at all. The reason is clear: infrastructure.

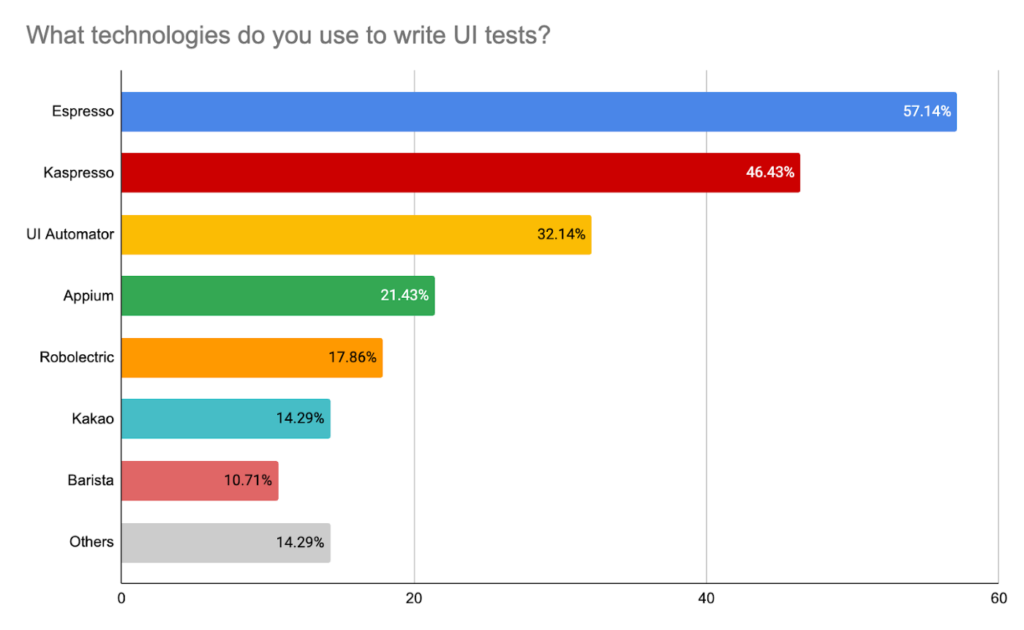
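Adding emulators only helps if tests are spread evenly across them. A common heuristic is greedy "longest processing time first" sharding based on historical durations: sort tests from slowest to fastest and always hand the next one to the least-loaded emulator. The sketch below assumes you already have per-test durations (e.g. from previous runs); dedicated test runners ship their own, more elaborate strategies, and this is not a description of how any of them works internally.

```kotlin
import java.util.PriorityQueue

// One emulator's share of the suite.
class Shard(val index: Int) {
    val tests = mutableListOf<String>()
    var totalSeconds = 0L
}

// Greedy "longest processing time first" sharding: slowest tests are placed
// first, each on the emulator with the smallest accumulated duration so far.
fun shardByDuration(durations: Map<String, Long>, emulators: Int): List<Shard> {
    require(emulators > 0) { "need at least one emulator" }
    val shards = List(emulators) { Shard(it) }
    // Least-loaded shard first; ties broken by index for deterministic output.
    val queue = PriorityQueue<Shard>(compareBy<Shard>({ it.totalSeconds }, { it.index }))
    queue.addAll(shards)
    for ((name, seconds) in durations.entries.sortedByDescending { it.value }) {
        val lightest = queue.poll()
        lightest.tests += name
        lightest.totalSeconds += seconds
        queue.add(lightest) // re-insert so the heap sees the updated load
    }
    return shards
}
```

With four tests of 300, 200, 200 and 100 seconds on 2 emulators, both shards end up at 400 seconds, so the wall-clock time is bounded by the slowest shard rather than by the whole suite.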

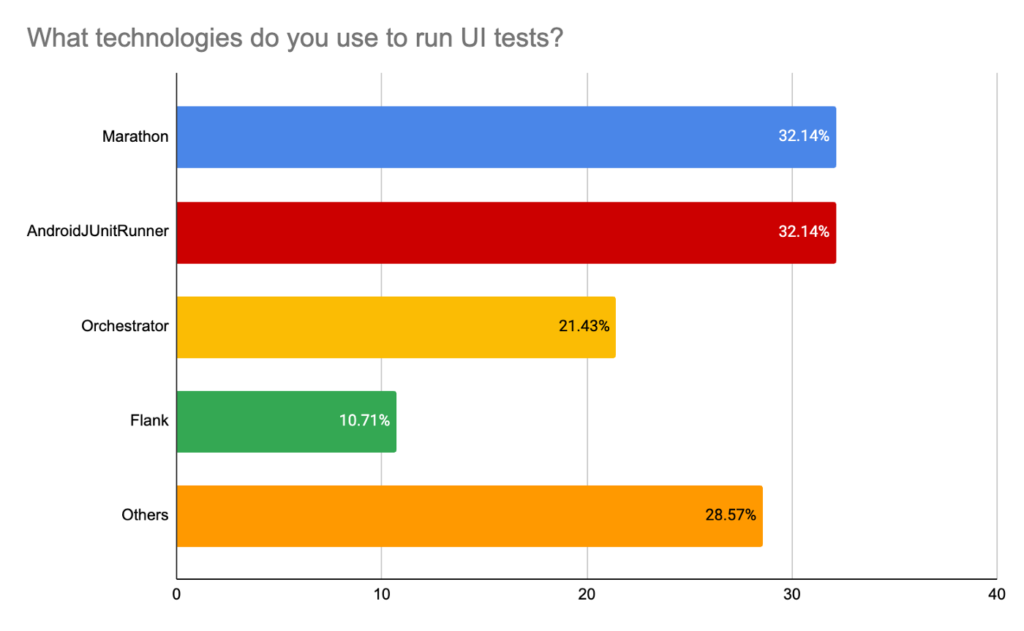

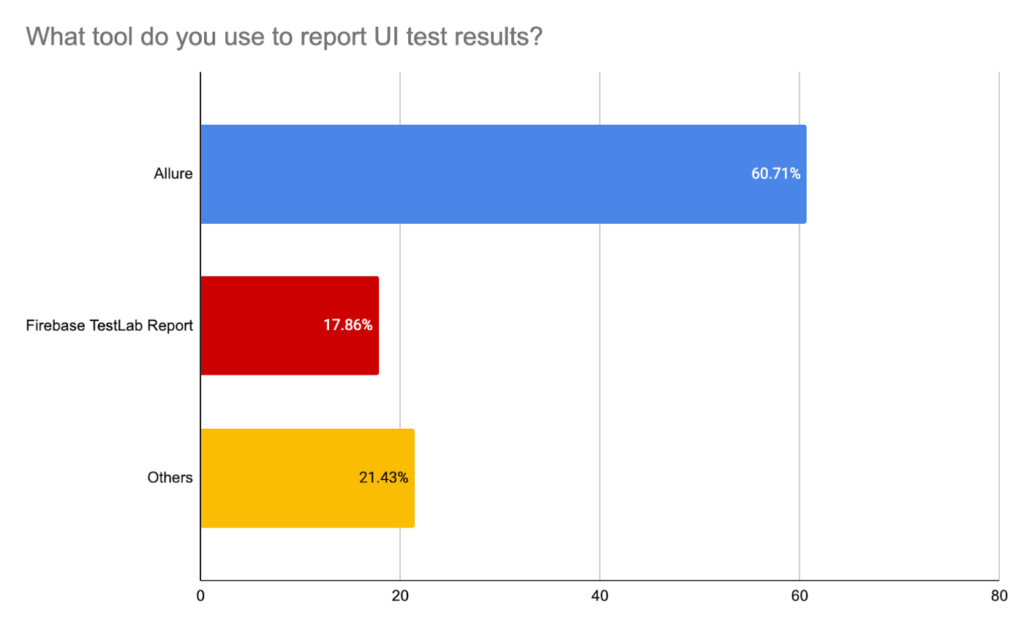

We then asked the teams about the technologies they use for test automation (here our participants could select multiple options):

As the survey results show, a standard tool set for Android test automation is Kaspresso (which almost took first place!) + Marathon + Allure. Espresso and UI Automator are also very popular. This can be explained by the need to customize tooling for a team's particular needs and by the existence of custom solutions built on top of these native tools.

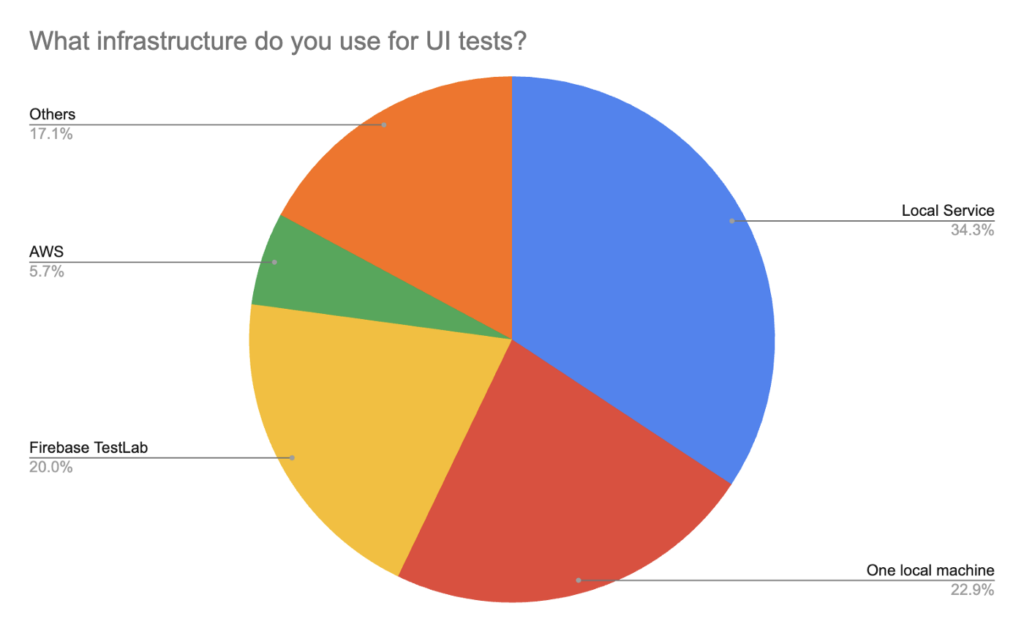

What about the infrastructure? The results here are rather interesting:

Almost half of our respondents use just one local machine. Local Service (a number of servers orchestrated by Kubernetes or something similar) is also among the leaders, as is Firebase TestLab. Other teams use AWS, Google Cloud, OpenSTF, build agents, etc.

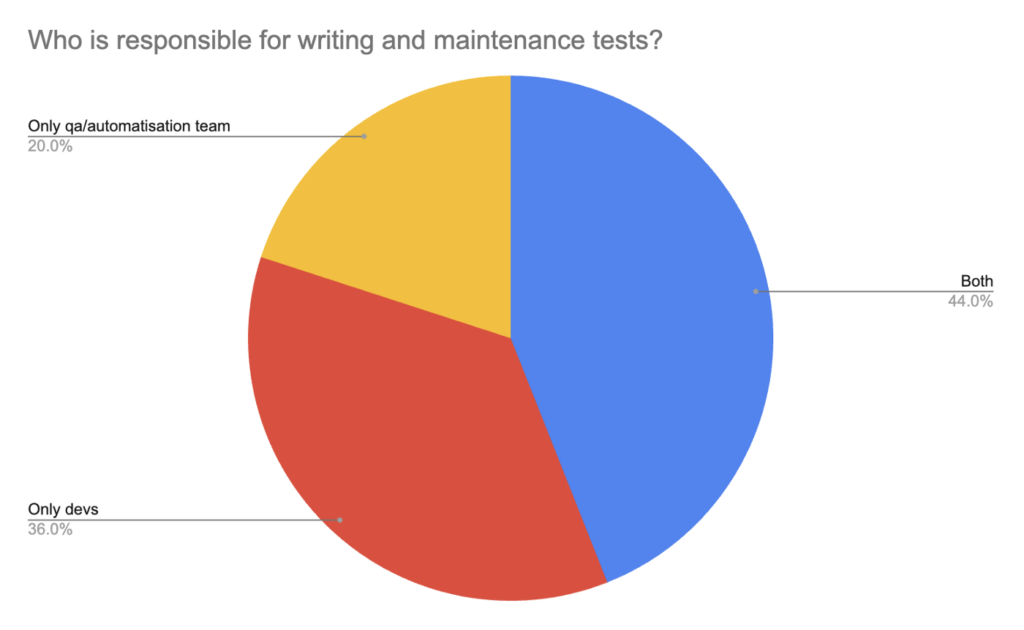

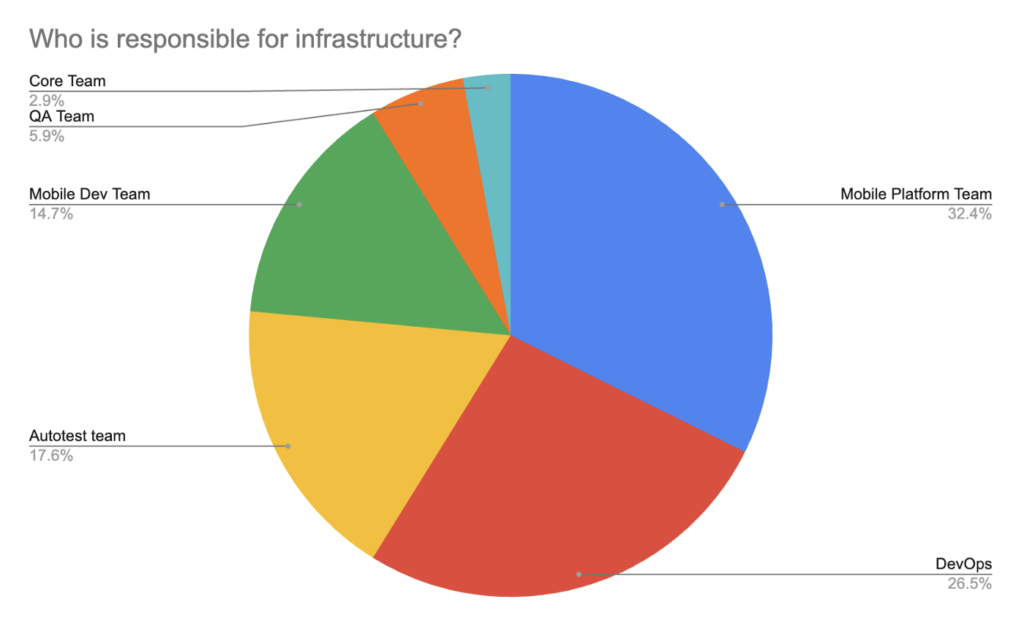

Next in line is the workflow, namely, who is responsible for “writing and maintaining tests” and for “test infrastructure”:

Note that writing tests is no longer the prerogative of QA or a separate automation team: this approach is clearly outdated. Developers are actively involved in writing UI tests, because tests ensure quality, which is their responsibility as well. This collaborative test writing process helps developers and testers reach better results by accepting common responsibility for product quality.

Infrastructure-related problems, in turn, are mainly delegated to a DevOps team or a Mobile Platform team.

One of our last questions concerned the tools that teams would like to use to improve their automated tests (performance, capabilities, convenience, etc.). Here is the list, sorted by popularity:

- Test Validity Gate. A new test is run 5–10 times, sometimes more, to confirm that it is stable. If it shows no flakiness, the test is accepted into the test suite.

- Impact Analysis. Instead of running the whole suite, only the tests affected by code changes are run.

- Network mock/proxy. Mocking and proxying network requests for the application or the whole test device, including recording mocks correctly and keeping them up to date. Relevant tools include MockWebServer, mitmproxy, Charles, etc.

- Screenshot diff. A visual comparison of screenshots from a successful run and a failed one, which instantly shows what changed in the UI.

- Hot Docker images. “Warmed up” Docker images of emulators, which save a significant amount of time at startup. By the way, an app can also be “warmed up” (e.g. with the user already logged in).

- Module tests. A greater emphasis on module tests, which check only part of the functionality rather than a complete scenario, so that each test does not have to go through authorization, the home screen, etc.
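A Test Validity Gate boils down to a simple loop. In this sketch, `runTest` is a hypothetical callback standing in for whatever actually launches the new test on a device; it returns `true` when the run passes. The default of 10 runs matches the upper end of the 5–10 runs mentioned above, and the loop deliberately does not stop at the first failure so that the result also shows how flaky the test is.

```kotlin
// Outcome of a validity gate: how many of the repeated runs passed or failed.
data class GateResult(val passed: Int, val failed: Int) {
    // A new test is accepted only if it never failed (no observed flakiness).
    val accepted: Boolean
        get() = failed == 0
}

// Runs the hypothetical `runTest` callback `runs` times and counts passes.
fun validityGate(runs: Int = 10, runTest: (attempt: Int) -> Boolean): GateResult {
    require(runs > 0) { "runs must be positive" }
    var passed = 0
    repeat(runs) { attempt ->
        if (runTest(attempt)) passed++
    }
    return GateResult(passed = passed, failed = runs - passed)
}
```

A result of, say, 9 passes and 1 failure means the test stays out of the suite until its flakiness is fixed.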
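The core of a screenshot diff is a pixel-by-pixel comparison. This minimal sketch uses the JDK's `BufferedImage`, assumes both screenshots have the same dimensions, and paints differing pixels red in a mask image; `screenshotDiff` is a hypothetical helper, and real screenshot-testing tools add tolerance for anti-aliasing, ignored regions and friendlier reports.

```kotlin
import java.awt.image.BufferedImage

// Result of comparing two screenshots of the same size.
data class DiffResult(val differingPixels: Int, val ratio: Double, val mask: BufferedImage)

fun screenshotDiff(expected: BufferedImage, actual: BufferedImage): DiffResult {
    require(expected.width == actual.width && expected.height == actual.height) {
        "screenshots must have the same dimensions"
    }
    // Transparent mask; only changed pixels get painted.
    val mask = BufferedImage(expected.width, expected.height, BufferedImage.TYPE_INT_ARGB)
    var differing = 0
    for (y in 0 until expected.height) {
        for (x in 0 until expected.width) {
            if (expected.getRGB(x, y) != actual.getRGB(x, y)) {
                differing++
                mask.setRGB(x, y, 0xFFFF0000.toInt()) // opaque red marks a changed pixel
            }
        }
    }
    val total = expected.width * expected.height
    return DiffResult(differing, differing.toDouble() / total, mask)
}
```

In practice, the reference and failed-run screenshots would be loaded with `javax.imageio.ImageIO.read`, and the mask could be saved with `ImageIO.write` and attached to the test report.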

Our Conclusions

In this part of the article, Sergei and I give our opinion on how the situation with Android tests has changed compared with 2020. These are our personal conclusions based on the data we gathered. This is by no means a full-scale study, but we can certainly highlight some interesting points.

The process of writing automated tests

90% of the teams we surveyed use native tests. However, we cannot say whether this reflects a trend towards native tests over cross-platform solutions.

Teams that are just beginning to write automated tests are delighted with Kaspresso, as it saves them a lot of pain. This is an absolutely honest opinion without any bias on our part :). There are also teams that have already invested heavily in their own test automation solutions based on Espresso and UI Automator. They either keep improving their existing solution or gradually move to other options like Kaspresso, while retaining some of their existing Espresso and UI Automator tests.

Test runner and reports

Native solutions like AndroidJUnitRunner and Orchestrator remain popular. But if you are craving something better, the ‘one instrument to rule them all’ kind of thing, look no further than Marathon. It is a rapidly developing test runner whose only downside is that it does not cover infrastructure at all, unlike, for instance, Flank, which works with Firebase TestLab (though not with any other such service).

When it comes to test reports, most of our Russian-speaking respondents prefer Allure. To be honest, there seems to be no better solution on the market today.

Infrastructure

Long-established teams have managed to build custom systems on their own servers, which they orchestrate and maintain themselves. Otherwise, though, the infrastructure situation remains rather frustrating. Currently, there is no simple, flexible and scalable solution on the market, which is likely why 23% of the respondents run their tests on just one local machine.

Of course, there is always Firebase TestLab, but it only works well for a small number of relatively simple tests. As the number of tests grows, you will inevitably want reliable reports, parallel test runs out of the box, test run history and analytics, customizable Docker images (e.g. with configured proxies), and much more. All of this you will have to configure yourself.

We have heard of a tool called emulator.wtf, but we do not yet know of any successful cases of its use. Another fresh tool is TestWise, whose team Sergei is currently consulting. In the next article, we plan to compare all the services mentioned above.

Test automation process

The prevailing opinion used to be that automated tests are the exclusive responsibility of a test automation team. This paradigm worked for a while, but from the outset it led to problems such as the application and its tests falling out of sync, responsibility being shifted onto others, etc. We now observe a fundamental shift in mindset towards the idea that automated tests are an integral part of an application and a necessary component of its quality. Developers are much more actively involved in test automation, and tests are run as early as possible, that is, on PRs. Moreover, mocking the backend is important for test stability, and this definitely requires developers' active help. Running tests on PRs also makes it much easier to find the cause of a failure, as the scope of changes is limited.
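To illustrate what mocking the backend can look like, here is a minimal canned-response server built on the JDK's bundled `com.sun.net.httpserver.HttpServer`. It is only a stand-in for dedicated tools like MockWebServer; the `CannedBackend` class, its paths and JSON bodies are hypothetical. The app under test would need to be pointed at `baseUrl`, for example through a debug build setting; from an Android emulator, the host machine's loopback is reachable at 10.0.2.2.

```kotlin
import com.sun.net.httpserver.HttpServer
import java.net.InetSocketAddress

// Hypothetical mock backend: serves canned JSON bodies keyed by request path.
// Paths without a canned response get a 404 with a small error body.
class CannedBackend(private val responses: Map<String, String>) : AutoCloseable {
    // Port 0 lets the OS pick a free port, so parallel runs do not clash.
    private val server: HttpServer = HttpServer.create(InetSocketAddress("127.0.0.1", 0), 0)

    init {
        server.createContext("/") { exchange ->
            val canned = responses[exchange.requestURI.path]
            val status = if (canned != null) 200 else 404
            val bytes = (canned ?: """{"error":"not mocked"}""").toByteArray()
            exchange.responseHeaders.add("Content-Type", "application/json")
            exchange.sendResponseHeaders(status, bytes.size.toLong())
            exchange.responseBody.use { it.write(bytes) }
        }
        server.start()
    }

    // Base URL the app under test should be configured to call.
    val baseUrl: String
        get() = "http://127.0.0.1:${server.address.port}"

    override fun close() = server.stop(0)
}
```

Because responses are fixed, a failing PR run points at the app change rather than at a flaky or changing backend.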

The End

Looks like it is time to say our goodbyes. Thank you for reading this article! Do not hesitate to write your questions and thoughts in the comments below; we will be pleased to have a discussion.

Many thanks to Christina Rozenkova for her help in translation!

1 Comment

I maintain https://github.com/screenshotbot/screenshotbot-oss

This can integrate with most UI testing tools to create a better developer experience (automated notifications on Pull Requests, better image diffs, etc.)